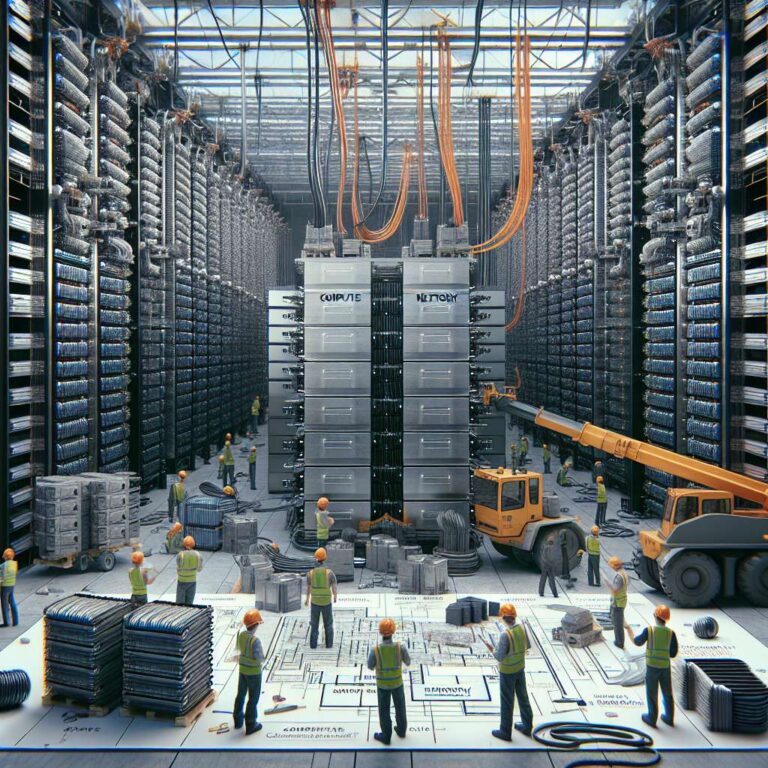

Hyperscalers are escalating spending on specialized artificial intelligence (AI) chips and redesigning entire data center stacks around the accelerated computing workloads planned for 2026. Strategic roadmaps now center on tightly integrating compute, networking, and memory around new generations of AI accelerators from vendors including Nvidia, AMD, and Intel, with an emphasis on balancing performance, energy efficiency, and total cost of ownership at massive scale.

As demand for larger models and more complex AI workloads grows, supply chains are pivoting to advanced packaging techniques and high-bandwidth memory so that accelerators can be fed data quickly enough. Hyperscalers are prioritizing chip designs that can be co-optimized with custom interconnects, on-premises infrastructure, and cloud services, while also planning for future process nodes expected around 2026. These priorities reflect a shift from general-purpose compute toward highly specialized silicon tailored to training and inference.

Vendors are competing to align their AI chip roadmaps with hyperscaler requirements for performance, memory capacity, and integration into existing cloud platforms. The top priorities for 2026 deployments include securing reliable supply, adopting advanced packaging and high-bandwidth memory, optimizing energy usage at the rack and data center level, and ensuring that AI chips can be rapidly deployed into evolving software stacks. Collectively, these moves are reshaping data center design and investment patterns as hyperscalers prepare for the next wave of AI workloads.