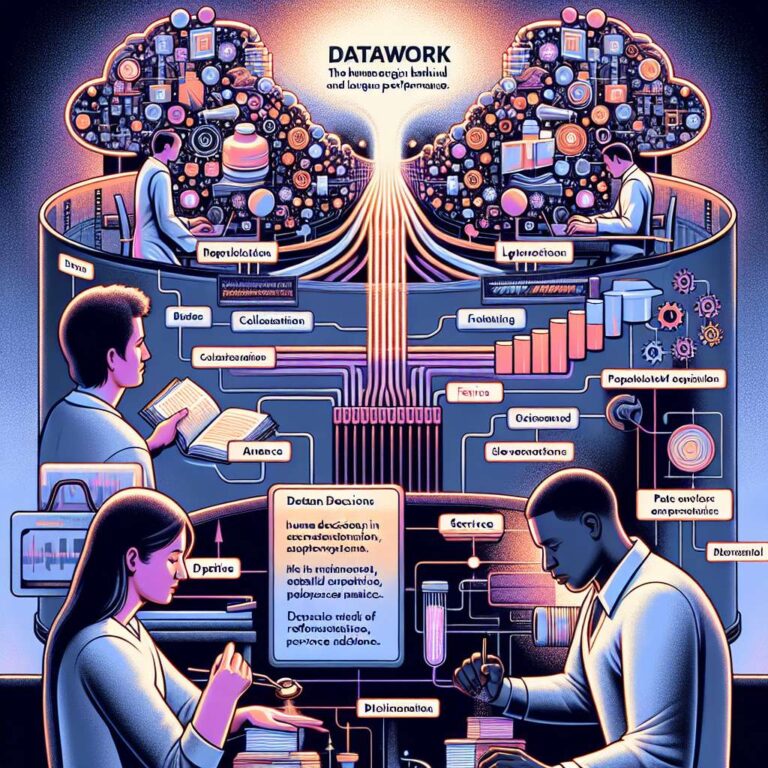

“Datawork is deeply human,” said Adriana Alvarado, staff research scientist at IBM, in her presentation “LLM + Data: Building Artificial Intelligence with Real & Synthetic Data.” She framed data as the engine behind every model, from simple algorithms to the largest Large Language Models. Alvarado stressed that ongoing human decisions about collection, annotation and preparation shape an Artificial Intelligence system’s performance, fairness and utility, and that these decisions are often obscured by technical narratives.

Alvarado used the term datawork to describe the lifecycle activities that produce and maintain datasets: collecting, annotating, curating, deploying and iteratively refining data. She cautioned founders and investors that data is not a static commodity but a dynamic, human-shaped resource. Because these choices have downstream effects on model behavior, datawork is a strategic differentiator, yet it remains undervalued and frequently invisible within broader Artificial Intelligence development processes.

The human element in datawork introduces representational bias. Alvarado noted that many datasets do not represent the world equally, over-representing some regions, languages and perspectives while under-representing others. Labeling and categorization choices implicitly determine who is represented. As a result, even advanced Large Language Models trained on skewed data can perpetuate inequalities and perform poorly for underrepresented users or applications, raising ethical and practical risks for product deployments and global markets.
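One rough way to surface the kind of skew described above is to compare each group's share of a dataset against a reference distribution. The sketch below is illustrative only and does not come from the talk: the function names, the `language` field, the reference shares and the `tolerance` threshold are all assumptions chosen for the example.

```python
from collections import Counter


def representation_shares(records, key):
    """Return each group's fraction of the dataset for the given field."""
    counts = Counter(record[key] for record in records)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}


def underrepresented(shares, reference, tolerance=0.5):
    """List groups whose dataset share is below tolerance * reference share.

    `reference` is a hypothetical target distribution (for example, the
    share of intended users per language); it is an assumption, not a standard.
    """
    return sorted(
        group
        for group, ref_share in reference.items()
        if shares.get(group, 0.0) < tolerance * ref_share
    )


# Toy dataset: 8 English records, 1 Spanish, 1 Swahili (hypothetical values).
records = (
    [{"language": "en"}] * 8
    + [{"language": "es"}]
    + [{"language": "sw"}]
)
reference = {"en": 0.4, "es": 0.3, "sw": 0.3}

shares = representation_shares(records, "language")
flagged = underrepresented(shares, reference)
print(flagged)
```

A count like this only shows who is missing along the dimensions someone chose to record, so the choice of fields and reference distribution is itself a human datawork decision.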

To address data scarcity, privacy constraints and coverage gaps, practitioners increasingly explore synthetic data generated by Large Language Models. Alvarado warned that synthetic data is not a cure-all: every synthetically generated dataset requires detailed documentation of seed data, prompts and parameter settings to preserve provenance and enable accountability. She concluded with three forward-looking points: specialized datasets matter, scale alone does not ensure diversity or quality, and dataset categories must reflect real user needs and application conditions. The future of robust, ethical and performant Artificial Intelligence, she argued, depends as much on meticulous datawork as on algorithmic advances.
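The documentation requirement for synthetic data (seed data, prompts and parameter settings) could be captured as a small provenance record stored alongside each generated dataset. This is a minimal sketch under stated assumptions, not a format proposed in the talk: the class name, field names, the `generator_model` field and the example values are all hypothetical.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field


@dataclass(frozen=True)
class SyntheticDataRecord:
    """Hypothetical provenance record for one synthetic dataset."""

    seed_data_source: str   # where the seed examples came from
    prompt: str             # prompt used to generate the data
    generator_model: str    # assumed extra field: which model produced it
    parameters: dict = field(default_factory=dict)  # e.g. sampling settings

    def to_json(self) -> str:
        # Sorted keys keep the serialization stable across runs.
        return json.dumps(asdict(self), sort_keys=True)

    def fingerprint(self) -> str:
        # SHA-256 of the serialized record; changes if any field changes.
        return hashlib.sha256(self.to_json().encode("utf-8")).hexdigest()


# Example values are placeholders, not real datasets or models.
record = SyntheticDataRecord(
    seed_data_source="support_tickets_sample.csv",
    prompt="Rewrite each ticket as a polite customer question.",
    generator_model="example-llm",
    parameters={"temperature": 0.7, "max_tokens": 256},
)

print(record.to_json())
print(record.fingerprint())
```

Storing the fingerprint with the dataset gives reviewers a simple check that the documented prompt and settings are the ones actually used.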