Businesses are rapidly integrating agentic artificial intelligence into operations using the model context protocol, an emerging standard that lets large language models connect to external tools and data sources. Model context protocol works as an intermediary layer where an artificial intelligence model, acting as a host, embeds a client that connects one-to-one with a server providing functions such as database access, file handling, or web application calls. The model never interacts directly with external systems, but the security burden is shifted to each server and client implementation, many of which were built without strong safeguards. As adoption accelerates, including around 20,000 model context protocol server implementations on GitHub, the security implications are growing more serious.

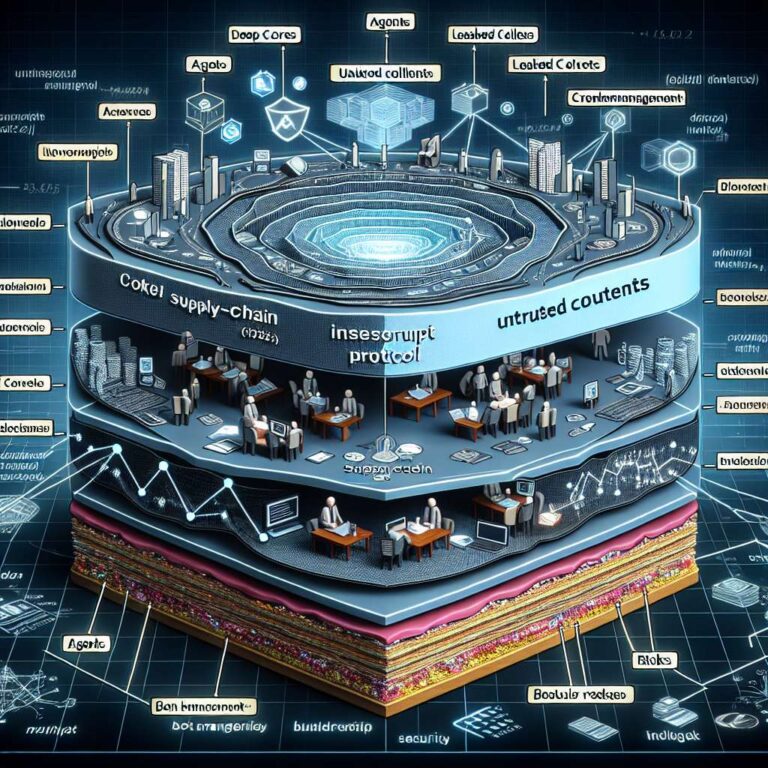

Security researchers point to several primary risks arising from model context protocol deployments. Some early sessions leaked sensitive tokens through URL query strings, and analysis from Red Hat stresses that model context protocol servers are executable code that must be treated as untrusted unless verified, ideally through cryptographic signing. This expands the artificial intelligence attack surface because any flaw in a server or tool definition can mislead a model into harmful actions, whether by accident or through deliberate abuse. Independent research shows AI bot traffic grew 4.5× in 2025, with automated requests now exceeding human browsing behaviour, which further undermines traditional visibility and governance. Detailed studies highlight issues such as supply chain and tool poisoning, where malicious prompts or code are injected into servers or tool metadata, and credential management weaknesses, where Astrix’s large-scale study found that almost 88% of model context protocol servers require credentials, but 53% of them rely on long-lived static API keys or PATs, and only about 8.5% use modern OAuth-based delegation.

Additional threats include over-permissive confused deputy scenarios, since model context protocol does not inherently carry user identity to servers, enabling attackers to trick models into invoking powerful tools on their behalf. Prompt and context injection becomes more sophisticated when attackers covertly poison data sources or files with hidden instructions that are executed when agents retrieve them. The rise of unverified third-party servers for platforms such as GitHub and Slack adds classic supply chain exposure. These issues show that standard API and application controls are insufficient, so purpose-built model context protocol security tools are emerging to monitor agent-to-tool interactions, enforce least privilege, and detect anomalous behaviour at runtime. In parallel, digital businesses face a spike in artificial intelligence driven bot activity, with data from DataDome showing that across its customer base, LLM bots grew from around 2.6% of all bot requests to over 10.1% between January and August 2025.

During peak retail periods, abuse such as credential stuffing, scraping, fake account creation, and inventory scalping intensifies, concentrating attacks on login flows, forms, and checkout pages where credentials and payment data are entered. Large-scale testing indicates that only a small portion of popular sites can reliably block automated abuse, while most cannot even stop basic scripted bots, let alone adaptive artificial intelligence agents that mimic human users. Providers such as DataDome are shifting defenses toward intent-based traffic analysis using behavioral signals to separate malicious automation from legitimate users and approved artificial intelligence agents, and DataDome reports blocking hundreds of billions of bot-driven attacks annually. The combination of model context protocol specific vulnerabilities and surging artificial intelligence automation means organizations now need tighter controls on high-risk entry points, continuous, real-time detection, and artificial intelligence aware protection as model context protocol enabled applications move into the mainstream.