Generative media is emerging as an ecosystem distinct from large language models, marked by a high degree of model fragmentation and specialization. Enterprise production deployments use a median of 14 different models, reflecting the need to match each model to a specific task such as photorealistic imagery, anime aesthetics, physics simulation, background removal, sound generation, or multi-shot narrative scenes. Unlike the large language model market, where OpenAI, Google (Gemini), and Anthropic together command 89% of enterprise wallet share, generative media has no small set of dominant providers. Because release cycles outpace typical enterprise software timelines, infrastructure teams are expected to support this diversity by rolling out new models every few weeks and providing day-0 support for new releases.

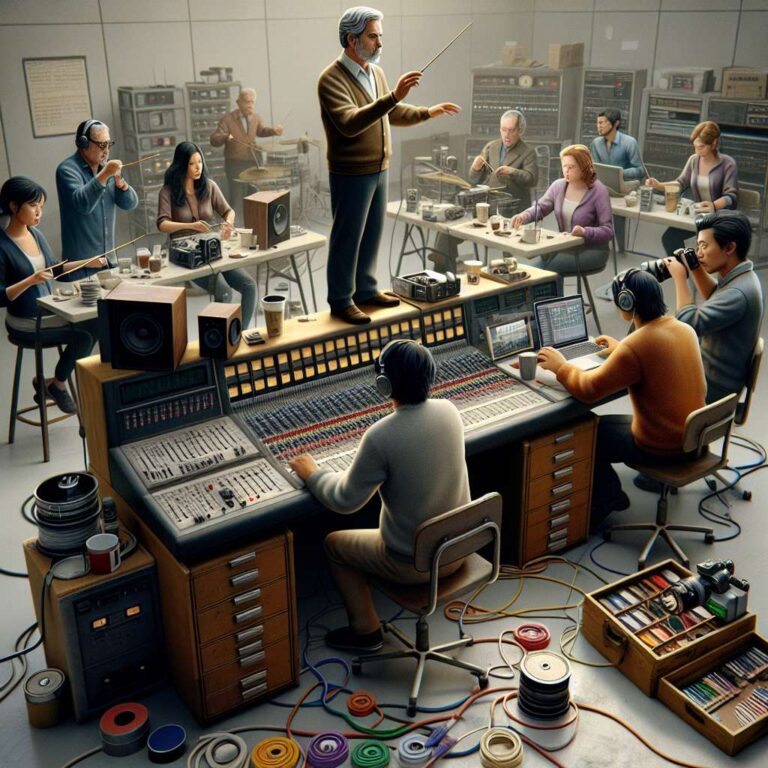

Production workflows in generative media increasingly chain multiple models rather than relying on a single inference call. Developers commonly generate an image, remove the background, upscale, recolor, and apply a style-consistent LoRA to reach a level of brand consistency that one-shot prompting cannot achieve. Long-form video adds further complexity: scene generation, camera motion control, character persistence across cuts, dialogue synthesis, sound design, and post-production effects, each powered by specialized models that feed into one another. This makes orchestration as important as raw inference performance. It drives demand for unified interfaces across models, workflow primitives for composing multi-step pipelines, streaming of intermediate results, and queue management for long-running jobs, so that teams spend less time on integration plumbing and more on product.
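The chaining pattern described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not any provider's API: `Asset`, `make_step`, and `run_pipeline` are hypothetical names, and each step is a placeholder that only records its name where a real step would call an inference endpoint. Writing the pipeline as a generator lets a client stream each intermediate result as soon as a stage finishes.

```python
from dataclasses import dataclass
from typing import Callable, Iterator


@dataclass(frozen=True)
class Asset:
    """Hypothetical stand-in for an image or video artifact passed between models."""
    uri: str
    history: tuple[str, ...] = ()


# A step wraps one model call: it takes an asset and returns a new one.
Step = Callable[[Asset], Asset]


def make_step(name: str) -> Step:
    """Build a placeholder step (hypothetical); a real one would call a model endpoint."""
    def run(asset: Asset) -> Asset:
        # Record which stage touched the asset instead of doing real inference.
        return Asset(uri=f"{asset.uri}|{name}", history=asset.history + (name,))
    run.__name__ = name
    return run


def run_pipeline(seed: Asset, steps: list[Step]) -> Iterator[Asset]:
    """Feed each step's output into the next, yielding every intermediate
    result so progress can be streamed instead of returned only at the end."""
    asset = seed
    for step in steps:
        asset = step(asset)
        yield asset


# The image workflow from the text: generate, remove background, upscale, recolor, apply a LoRA.
stages = ("generate", "remove_bg", "upscale", "recolor", "style_lora")
pipeline = [make_step(name) for name in stages]
results = list(run_pipeline(Asset(uri="prompt://red-sneaker"), pipeline))
```

In a production setting each stage would more likely be submitted to a job queue than called synchronously, but the compose-and-stream shape stays the same.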

Cost and quality tradeoffs are becoming more sophisticated as teams optimize both models and infrastructure. For large volumes of small, utilitarian images such as product thumbnails or feed assets, companies favor fast, inexpensive models like Flux, while hero assets for ad campaigns, logos, or brand imagery, where imperfections are unacceptable, justify higher spend on models such as Nano Banana Pro. In fal’s survey with Artificial Analysis, 58% of organizations named cost optimization as their primary criterion when selecting model infrastructure, ahead of model availability and generation speed. This points to competition on two fronts: among infrastructure providers to run models efficiently, and among models along the cost-quality frontier. Adoption spans many industries, with gaming, advertising, and e-commerce leading in the use of generative tools for prototyping, content variation, and large-scale asset production, replacing traditional photography and lengthy post-production cycles.
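The tiering decision above amounts to a routing rule keyed on asset type. The sketch below assumes a hypothetical two-tier scheme: `Tier`, `Job`, `ROUTES`, `route`, `plan`, and the model identifiers `fast-cheap-model` and `premium-model` are placeholders, not real endpoints, prices, or any vendor's API.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    UTILITY = "utility"  # thumbnails, feed assets: volume and cost dominate
    HERO = "hero"        # campaigns, logos, brand imagery: quality dominates


@dataclass(frozen=True)
class Job:
    name: str
    tier: Tier


# Hypothetical routing table; model names are placeholders.
ROUTES: dict[Tier, str] = {
    Tier.UTILITY: "fast-cheap-model",
    Tier.HERO: "premium-model",
}


def route(job: Job) -> str:
    """Pick a model for a job based only on its asset tier."""
    return ROUTES[job.tier]


def plan(jobs: list[Job]) -> Counter:
    """Count how many jobs would be sent to each model."""
    return Counter(route(job) for job in jobs)


# A typical batch: many utilitarian thumbnails and a single hero asset.
jobs = [Job(f"thumb-{i}", Tier.UTILITY) for i in range(1000)]
jobs.append(Job("campaign-hero", Tier.HERO))
print(plan(jobs))
```

Keeping the mapping in a table rather than in branching logic makes it cheap to swap in a newly released model for one tier without touching the other.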

Advances in video and immersive environments are setting the direction for the next wave of products. Seedance 1.0 topped leaderboards in June 2025, and previews of Seedance 2.0 are described as significantly more capable, while models such as Kling and Grok are close behind and further releases are expected from OpenAI's Sora and DeepMind's Veo. In 2025, model releases came every four to six weeks, and there is no reason to expect that pace to slow. World models are moving from prototype to product, highlighted by Marble from World Labs, which can generate persistent, interactive 3D environments from a single image or text prompt, and Genie 3 from DeepMind, which pursues real-time video that users can explore like a game.

At the same time, enterprises are increasingly choosing open-source generative media models not primarily for cost but for customizability, since fine-tuning on proprietary data is essential for brand consistency, character persistence, and product fidelity across millions of assets. Closed APIs often restrict this level of control, while open-source models such as Flux and Qwen Image Edit closed the quality gap faster than expected in 2025, reinforcing the shift toward open, customizable infrastructure.