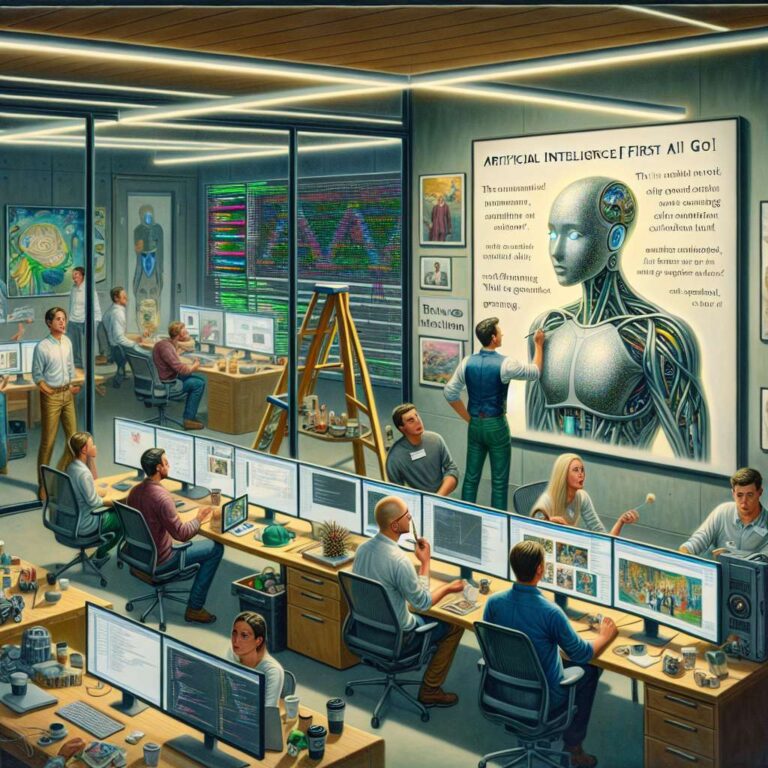

Electronic Arts has announced a partnership with Stability AI that it says will help artists iterate faster and develop games more efficiently. A Business Insider report cited employees who say Electronic Arts has been pushing staff to use artificial intelligence (AI) tools for at least a year. Sources described a gap between the company's messaging about future tools and the experience of using its current in-house systems.

Employees familiar with the internal tools told Business Insider that the AI assistants often hallucinate, producing incorrect or broken code that developers must fix by hand. Artists expressed concern that their art was used to train models, a practice they fear could devalue creative work and reduce demand for artists. The report also says about 100 quality assurance staff were recently laid off because the company deployed systems that could review and summarize tester feedback, a change that staff associate with the broader push to automate workflows.

Despite those complaints, Electronic Arts is publicly leaning further into AI with its Stability AI partnership. The article also notes wider industry movement: publisher Krafton announced a pivot to become an "AI-first" company and said it will invest in GPU horsepower to support agentic automation, though the report does not specify the exact sum. The piece closes by recalling that investors who recently acquired a stake in Electronic Arts indicated they would rely on AI to reduce operating costs, a strategy that sources say is already influencing staffing and tool adoption.