A research team in China introduced cache-to-cache, a communication method that allows large language models to share meaning by transmitting their internal memory rather than final text. The authors argue that text-based exchanges between models create three problems: a bandwidth bottleneck, ambiguity in natural language, and latency from token-by-token generation. By transferring the key value cache directly, models can convey richer intermediate representations and context, avoiding misunderstandings that arise when instructions are phrased only in text.

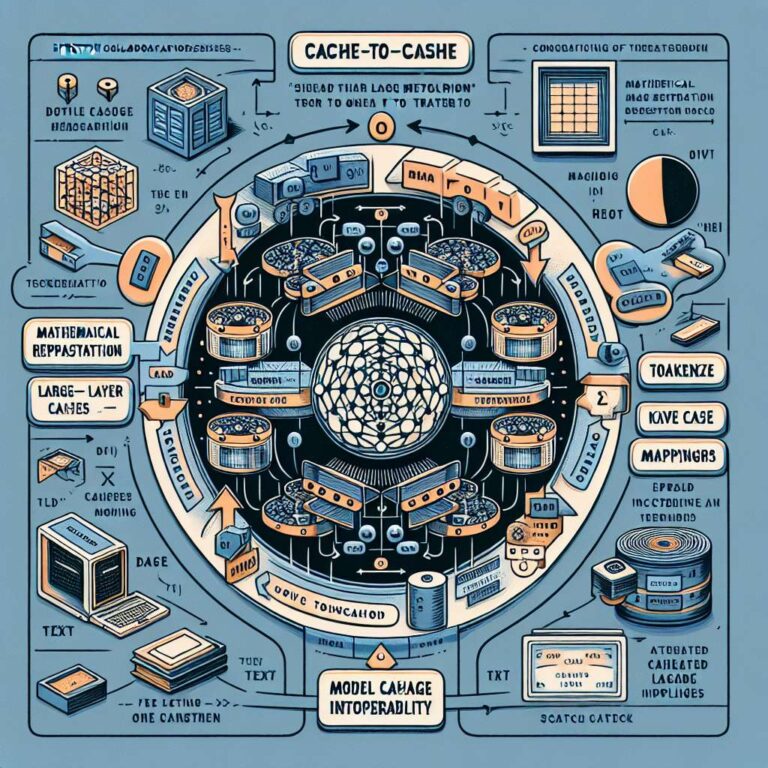

The KV cache functions as a model’s scratchpad, storing mathematical snapshots of tokens and their relationships. In one example, a programmer model asks a writer model to place content correctly in an HTML structure. With text-only instructions, the writer can misinterpret tags and position elements incorrectly. With cache-to-cache, the writer receives the programmer’s internal representation of the page structure and places content precisely. The system fuses a source model’s cache into a target model via a neural component called Cache Fuser, which includes a projection module to align cache formats, a dynamic weighting mechanism to control how much transferred information is used, and an adaptive gate that selects which layers should be enriched. The researchers also align tokenization and layer mappings so different models can synchronize their internal states.

In benchmarks, cache-to-cache outperformed text-based coordination by 3 to 5 percent and improved accuracy by 8.5 to 10.5 percent over single models, while roughly doubling speed. Tests covered combinations of Qwen2.5, Qwen3, Llama 3.2, and Gemma 3 across sizes from 0.6 billion to 14 billion parameters. Larger source models yielded greater gains. Technical analyses showed higher information density in fused caches, indicating that additional knowledge was successfully transferred, and the approach did not enlarge the cache itself.

The authors emphasize efficiency because only the connection module is trained while the source and target models remain unchanged. They highlight potential uses in privacy-sensitive collaboration between cloud and edge devices, pairing with acceleration methods, and integration into multimodal systems spanning language, images, and actions. The team open sourced its implementation and positions cache-to-cache as a practical alternative to text for building faster, more scalable Artificial Intelligence systems.