AWS has announced the deployment of its next-generation artificial intelligence (AI) server infrastructure, anchored by NVIDIA Blackwell GPUs and designed to scale machine learning and scientific research workloads. According to AWS, these P6e-GB200 UltraServers are already operational within its third-generation EC2 UltraClusters, which connect the company's largest data centers into a unified, high-throughput computing fabric. AWS says the systems support customer deployments across domains ranging from drug discovery and enterprise search to software engineering, demonstrating real-world reasoning capabilities at scale.

The new UltraClusters, which integrate the P6e-GB200 servers, improve operational efficiency: AWS cites up to a 40 percent reduction in power usage and a cut of more than 80 percent in cabling. Both changes streamline deployments and reduce potential points of failure. At the networking core, AWS uses its Elastic Fabric Adapter (EFA) with the Scalable Reliable Datagram (SRD) protocol. Paired with the new instances, EFAv4 delivers collective communications up to 18 percent faster for distributed machine learning training than previous generations using EFAv3, and it maintains performance during network congestion or failure events.
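To put the collective-communication figure in perspective, the sketch below estimates how much a whole training step speeds up when only its communication portion gets faster. It is a back-of-the-envelope, Amdahl-style calculation and not an AWS benchmark: the function name `step_speedup`, the 30 percent communication share, and the reading of "18 percent faster" as a 1.18x communication speedup with no compute/communication overlap are all assumptions made for illustration.

```python
def step_speedup(comm_fraction: float, comm_speedup: float) -> float:
    """Estimate overall training-step speedup when only communication gets faster.

    comm_fraction: share of step time spent in collectives (hypothetical input).
    comm_speedup:  factor by which collectives speed up, e.g. 1.18 (assumed reading
                   of "up to 18 percent faster"); assumes no overlap with compute.
    """
    if not 0.0 <= comm_fraction <= 1.0:
        raise ValueError("comm_fraction must be between 0 and 1")
    if comm_speedup <= 0.0:
        raise ValueError("comm_speedup must be positive")
    # Compute time is unchanged; communication time shrinks by the speedup factor.
    new_time = (1.0 - comm_fraction) + comm_fraction / comm_speedup
    return 1.0 / new_time


if __name__ == "__main__":
    # Hypothetical workload: 30% of each step is spent in collectives.
    print(f"{step_speedup(0.30, 1.18):.3f}x overall")
```

Under these assumptions, the end-to-end gain is smaller than the headline number unless a job is dominated by communication, which is why the benefit varies by workload.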

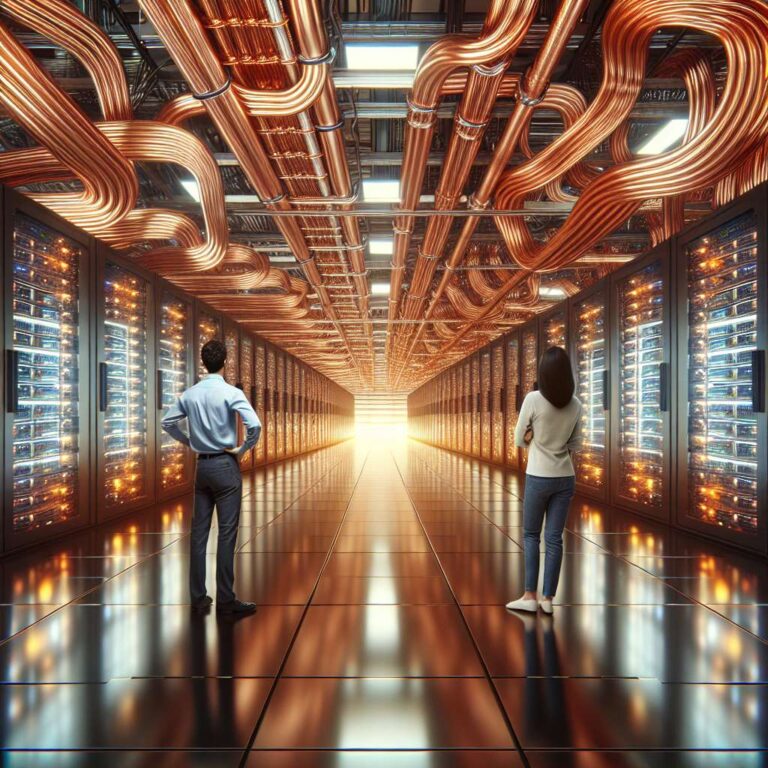

On cooling and density, AWS takes two approaches: P6-B200 instances use traditional air cooling, while the higher-density P6e-GB200 UltraServers use liquid cooling, which enables the larger NVLink domain architectures that modern deep learning depends on. The servers rely on liquid-to-chip cooling that fits both new and legacy AWS data centers, so air-cooled network and storage hardware can run alongside liquid-cooled accelerators. To extend accessibility, AWS confirmed that the P6e-GB200 UltraServers will be available through NVIDIA DGX Cloud, a unified AI platform featuring NVIDIA's software stack. The combination underscores AWS's commitment to advancing cloud-based AI infrastructure for a broad range of enterprise and scientific applications.