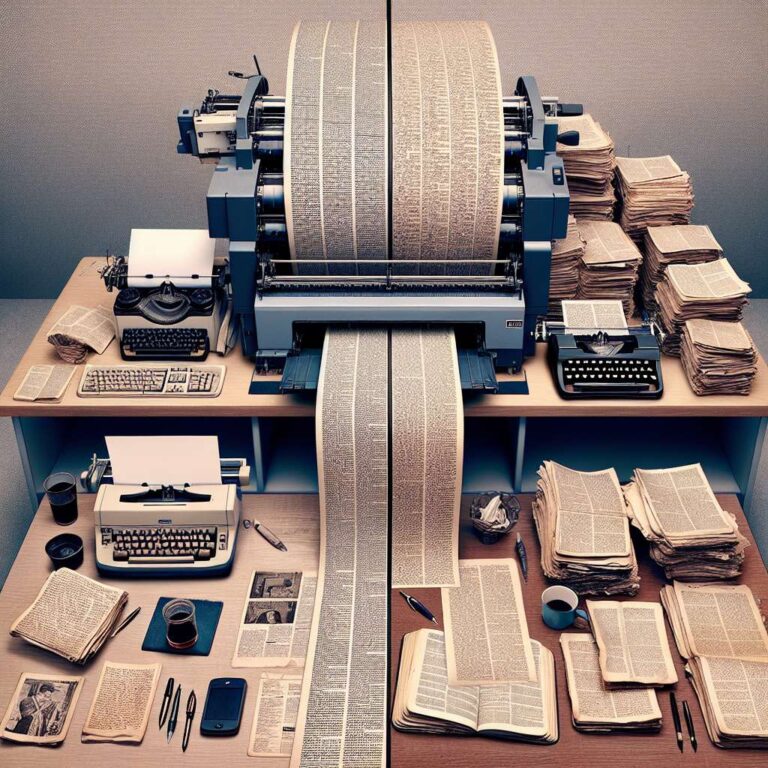

Observers and researchers have identified recurring markers of AI-generated text – previously rare words, formulaic structures and punctuation habits – that are appearing more often across online writing. ChatGPT recently reached 800 million weekly users, and 22% of respondents to our recent survey on generative AI and news in six countries say they use it every week. Common Crawl data indicate that a narrow majority of new articles on the web are authored by generative AI, raising questions about how to detect machine-authored prose and how any shift may affect English, a language widely used to build large language models.

Linguists at Florida State University and elsewhere have tried to quantify these shifts. Dr Tom S. Juzek and Dr Zina B. Ward cross-referenced words whose usage spiked in scientific writing with words that are more frequent in ChatGPT-generated scientific abstracts, identifying a set of focal words such as ‘delve’, ‘resonate’, ‘navigate’ and ‘commendable’. Research by Dr Hiromu Yakura found spikes in words overused by ChatGPT – including ‘delve’, ‘comprehend’, ‘boast’, ‘swift’ and ‘meticulous’ – in conversational podcasts and academic YouTube talks after ChatGPT’s release, suggesting that humans may adopt language patterns common in machine output. Experiments that asked Llama 3.2-3B Base and Llama 3.2-3B Instruct to generate halves of abstracts also recovered words likely favoured by LLMs. Researchers argue that one contributing factor may be reinforcement learning from human feedback: human rankers who prefer certain phrasing can amplify those preferences during training. In a replication study that paid testers $15 an hour instead of less than $2, testers favoured AI buzzwords.
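
The cross-referencing step described above can be illustrated with a minimal Python sketch. It is not the researchers' actual code: the function names, the tokenisation, the choice of pre-ChatGPT human writing as the baseline for both comparisons, and the `min_ratio` and `floor` thresholds are all assumptions made for illustration. The idea is simply to keep words that both rose in human writing over time and are over-represented in machine-generated text.

```python
import re
from collections import Counter


def per_million(texts):
    """Return each word's frequency per million tokens across the given texts."""
    counts = Counter(w for t in texts for w in re.findall(r"[a-z]+", t.lower()))
    total = sum(counts.values())
    return {w: c * 1_000_000 / total for w, c in counts.items()}


def overrepresented(target, baseline, min_ratio=2.0, floor=1.0):
    """Words whose rate in `target` is at least `min_ratio` times their rate in `baseline`.

    `floor` (an assumed value) stands in for the rate of words missing from the
    baseline, so that unseen words do not cause a division by zero.
    """
    t, b = per_million(target), per_million(baseline)
    return {w for w, rate in t.items() if rate / max(b.get(w, 0.0), floor) >= min_ratio}


def focal_words(human_before, human_after, ai_generated, min_ratio=2.0):
    """Cross-reference words that spiked in human writing with words favoured in AI text."""
    spiked = overrepresented(human_after, human_before, min_ratio)
    ai_favoured = overrepresented(ai_generated, human_before, min_ratio)
    return spiked & ai_favoured
```

On real corpora the thresholds would need tuning, and a study would also have to control for topic shifts and apply statistical tests; this sketch only shows the intersection logic.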

The implications for journalism are practical and ethical. Dr Karolina Rudnicka documents LLM tendencies toward concision, avoidance of slang and reduced use of the passive voice, as well as differences between models – for example, ChatGPT’s more formal tone versus Gemini’s more accessible word choices. Alex Mahadevan of Poynter warns that machine prose can be “empty” and, if relied on excessively, can lead to homogenised coverage, eroding dialects and activist language and flattening distinct reporting voices. Audience data show that only a third of respondents would be comfortable with AI being used to write article text, versus 55% for spelling help and 41% for headline writing. At the same time, generative tools can help non-native English speakers publish more widely. The consensus among the experts quoted is that AI appears to accelerate ongoing language changes, and that newsrooms should adopt ethics policies and verification practices while monitoring whether a feedback loop produces lasting homogenisation.