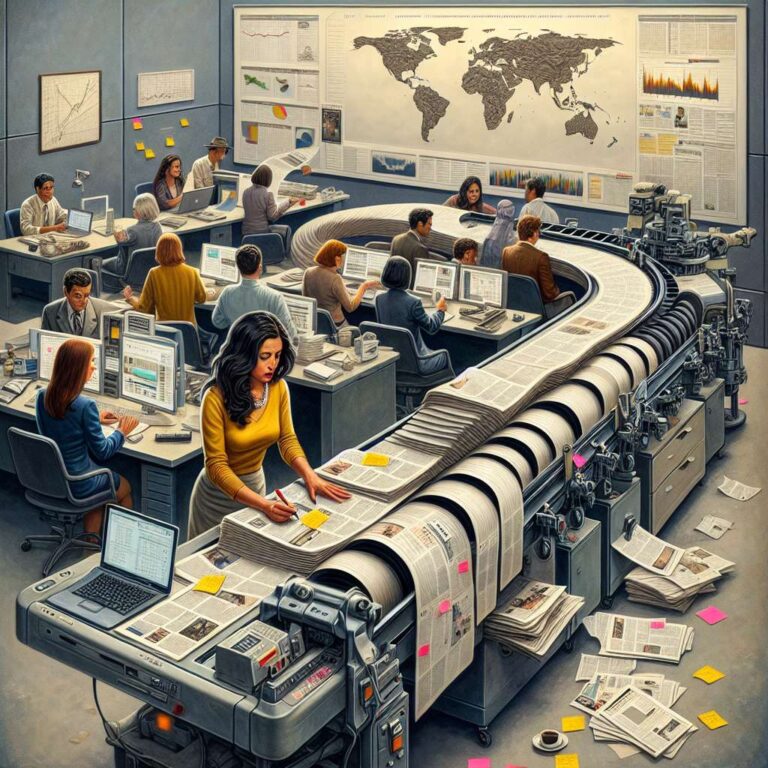

News production is speeding up as artificial intelligence (AI) shifts from experimental add-on to everyday newsroom infrastructure. The article outlines how tools now assist with everything from sourcing and data triage to drafting and distribution, reframing journalists’ roles around interpretation, accountability, and audience trust. Automation is strongest on structured, data-heavy updates, while human reporters remain essential for analysis, ethics, and storytelling. The article's roadmap emphasizes transparency about tool use, upskilling reporters, and building systems that preserve accuracy, fairness, and accountability as coverage becomes more personalized and interactive.

Concrete applications are already embedded at major outlets. The Associated Press and Reuters use natural language generation to turn structured inputs such as earnings, sports results, and other metrics into publishable briefs at near-instant speed, freeing reporters for deeper work. On the investigative side, AI helps surface patterns, anomalies, and connections across datasets and document collections too large to review manually. The Washington Post’s Heliograf system, for example, supported rapid election coverage by generating quick updates on voting trends. Distribution is also mediated by algorithms that tailor feeds to reader behavior, which improves relevance but raises concerns about filter bubbles that limit exposure to diverse perspectives.
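To make the structured-data-to-brief idea concrete, here is a minimal sketch of template-based text generation in Python. It is an illustration only and does not describe how the Associated Press, Reuters, or Heliograf actually work; the `EarningsRecord` fields, the function names, and the sample figures are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class EarningsRecord:
    """Hypothetical structured input; the field names are assumptions."""
    company: str
    quarter: str
    revenue_musd: float        # revenue in millions of USD
    prior_revenue_musd: float  # same quarter one year earlier
    eps: float                 # earnings per share in USD


def describe_change(current: float, prior: float) -> str:
    """Turn two figures into a phrase such as 'up 12.5% from'."""
    if prior <= 0:
        raise ValueError("prior value must be positive to compute a change")
    pct = (current - prior) / prior * 100
    if abs(pct) < 0.05:
        # Would round to 0.0%, so call it flat instead.
        return "flat compared with"
    direction = "up" if pct > 0 else "down"
    return f"{direction} {abs(pct):.1f}% from"


def render_brief(rec: EarningsRecord) -> str:
    """Fill a fixed sentence template from one structured record."""
    change = describe_change(rec.revenue_musd, rec.prior_revenue_musd)
    return (
        f"{rec.company} reported {rec.quarter} revenue of "
        f"${rec.revenue_musd:,.1f} million, {change} "
        f"${rec.prior_revenue_musd:,.1f} million a year earlier. "
        f"Earnings per share were ${rec.eps:.2f}."
    )


if __name__ == "__main__":
    # Made-up numbers for a fictional company.
    sample = EarningsRecord("Example Corp", "Q2", 112.5, 100.0, 1.2)
    print(render_brief(sample))
```

A template like this only restates the numbers it is given, which is why this kind of automation suits structured updates and why the accuracy of the brief depends entirely on the accuracy of the input data.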

The collaboration model centers on augmentation, not substitution. AI accelerates transcription, translation, data analysis, and first-draft generation, while humans contribute judgment, context, source relationships, and narrative depth. To sustain trust, the article calls for clear labeling of AI-assisted work, disclosures about data and tools, and human editorial oversight before publication. It recommends bias mitigation practices such as auditing training data, diversifying sources, and deploying bias-detection checks. Guidelines should enshrine human control of final decisions and require AI-generated text to meet the same fact-checking standards as any other copy.

Preparing the workforce is pivotal. Newsrooms and journalism schools are introducing training on using AI for research, verification, and data-driven reporting, as well as on the limits and ethical risks of algorithmic systems. Looking ahead, the piece forecasts more real-time report generation, sharper personalization, faster misinformation checks, and expanded use of AI in video production. It also highlights emerging immersive formats, with AI enabling augmented and virtual reality experiences that can contextualize complex stories. It anticipates more interactive delivery through chatbots and voice interfaces, with the enduring caveat that technology should serve public-interest journalism rather than define it.