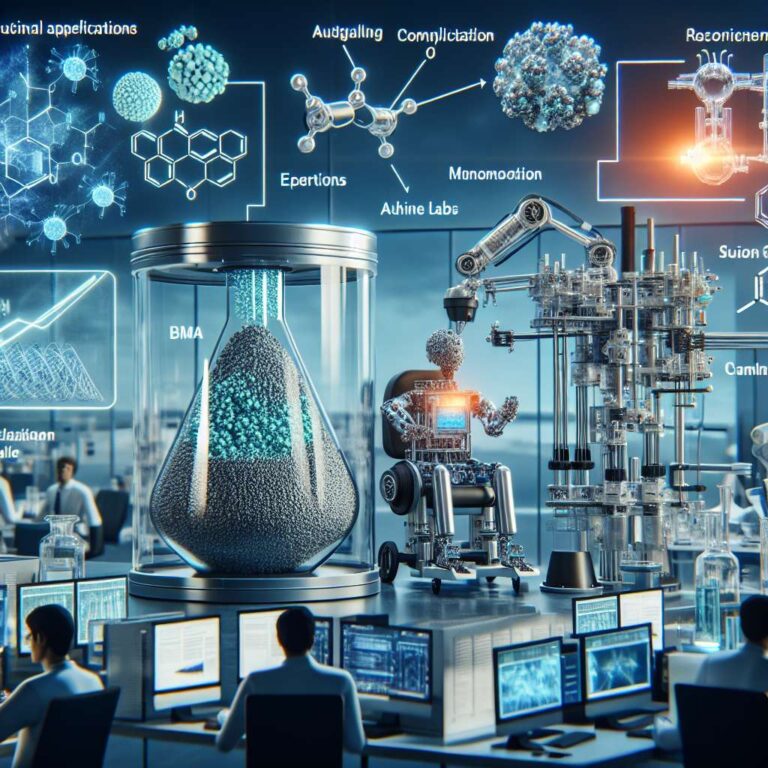

Inside Lila Sciences’ Cambridge lab, a sputtering instrument runs experiments under the direction of an artificial intelligence agent trained on scientific literature and data. The agent selects element combinations to form thin-film samples, which are later tested; the results are fed into another artificial intelligence system that proposes new experiments. Human scientists still supervise and approve each step, but Lila sees this setup as an early version of autonomous labs that could dramatically cut the time and cost of discovering useful new materials. The company, flush with hundreds of millions of dollars in funding and newly minted as a unicorn, is part of a broader push to use artificial intelligence-directed experimentation to pursue what it calls scientific superintelligence.

Materials science urgently needs a boost, as society demands better batteries, carbon capture materials, green-fuel catalysts, magnets, semiconductors, and potentially transformative technologies like higher-temperature superconductors and improved artificial intelligence hardware. Despite scientific advances such as perovskite solar cells, graphene transistors, metal-organic frameworks, and quantum dots, the field has produced relatively few major commercial wins in recent decades, in part because developing a new material typically takes 20 years or more and costs hundreds of millions of dollars. Traditional computational modeling, now accelerated by deep learning efforts at Google DeepMind, Meta, and Microsoft, has expanded the catalog of theoretically stable structures, but has repeatedly run into the same limitation: simulations cannot replace the need to synthesize and test materials in the lab.

DeepMind’s 2023 claim that deep learning had discovered “millions of new materials,” including 380,000 crystals deemed “the most stable,” drew intense attention from the artificial intelligence community but skepticism from many materials scientists, who argued the work largely produced proposed compounds simulated at absolute zero rather than demonstrated materials with real-world functionality. Critics at the University of California, Santa Barbara reported “scant evidence for compounds that fulfill the trifecta of novelty, credibility, and utility” and suggested that many of the supposed materials were trivial variants of known structures or idealized versions of already disordered crystals. The controversy underscored how hard it remains to bridge the gap between atomistic simulations and properties that depend on microstructure, finite temperature behavior, and poorly understood phenomena such as high-temperature superconductivity or complex catalysis.

In response, a new generation of startups is explicitly combining computation with aggressive automation. Periodic Labs, cofounded by DeepMind alum Ekin Dogus Cubuk and former OpenAI researcher Liam Fedus, is building a strategy around automated synthesis and large language models that digest scientific literature, propose recipes and conditions, interpret test data, and iterate designs, aiming at targets like quantum materials and, ultimately, a room-temperature superconductor. At Lawrence Berkeley National Laboratory, the A-Lab has demonstrated a fully automated inorganic synthesis line; its team reported using robotics and artificial intelligence to create and test 41 novel materials, including some from the DeepMind database, though the claims of novelty and the rigor of the analysis have been debated. Principal scientist Gerbrand Ceder envisions artificial intelligence agents that capture “diffused” expert knowledge, read a flood of papers, and make strategic experimental decisions, coordinated by higher-level orchestrator models such as those Radical AI is now building into its self-driving labs.

Despite these advances, the field has yet to produce a clear “AlphaGo moment” or an AlphaFold-like triumph; there is still no flagship example where artificial intelligence has rapidly delivered a commercially important new material. Venture investor Susan Schofer, who once worked at an early high-throughput materials startup, says she now looks for concrete signs that companies are already “finding something new, that’s different,” along with business models that capture value by specifying, scaling, and selling materials in partnership with incumbent manufacturers. Materials companies remain wary after past disappointments with promises that more computation, combinatorial chemistry, or synthetic biology would revolutionize their pipelines. A 2024 paper from an MIT economics student, which claimed that a large corporate lab had quietly used artificial intelligence to invent numerous materials, was later disavowed by the university as unreliable. Yet activity is accelerating: Lila is moving into a larger lab, Periodic Labs is shifting from artificial intelligence-guided experiments with manual synthesis toward robotics, and Radical AI reports an almost fully autonomous facility. Founders describe an influx of funding and a renewed sense of purpose for a field long overshadowed by drug discovery, even as they acknowledge that turning artificial intelligence tools and autonomous labs into real, adopted materials will require not just scientific breakthroughs but also persuading a conservative industry to embrace a new way of doing research and development.