At CES 2026, AMD chair and CEO Dr Lisa Su used the show’s opening keynote to describe how the company’s expanding portfolio of artificial intelligence products and cross-industry collaborations is moving AI from concept to practical deployment. The presentation highlighted advances from the data center to the edge, with partners including OpenAI, Luma AI, Liquid AI, World Labs, Blue Origin, Generative Bionics, AstraZeneca, Absci and Illumina explaining how AMD hardware underpins their artificial intelligence work. Su framed the industry’s rapid adoption of artificial intelligence as the beginning of a yotta-scale computing era and argued that AMD aims to provide its compute foundation through end-to-end technology, open platforms and deep co-innovation across the ecosystem.

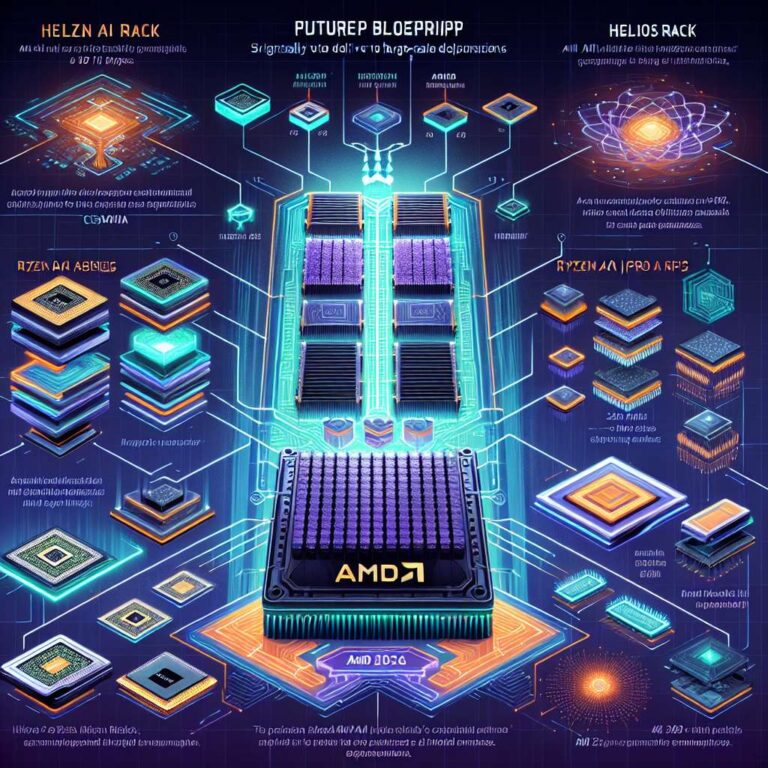

Su noted that global compute capacity for artificial intelligence is projected to grow from 100 zettaflops today to more than 10 yottaflops within the next five years. AMD’s answer at rack scale is the Helios platform, described as a blueprint for yotta-scale infrastructure that delivers up to 3 AI exaflops of performance in a single rack and is optimized for bandwidth, energy efficiency and training trillion-parameter models. Helios combines AMD Instinct MI455X accelerators, AMD EPYC “Venice” CPUs and AMD Pensando “Vulcano” NICs, all tied together by the open AMD ROCm software ecosystem. AMD used CES to give an early look at Helios, fully unveil the AMD Instinct MI400 Series accelerator lineup and preview the next-generation MI500 Series GPUs, positioning these products for both large-scale training and sovereign artificial intelligence systems.
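For a sense of scale, the capacity projection can be worked through directly. This is a rough check rather than AMD’s own calculation: it assumes the “10+” figure is taken as exactly 10 yottaflops (the keynote gave only a lower bound) and that growth compounds evenly over the five years, giving the total increase and an annual growth factor $r$:

$$
\frac{10\ \text{YFLOPS}}{100\ \text{ZFLOPS}} = \frac{10 \times 10^{24}}{100 \times 10^{21}} = \frac{10^{25}}{10^{23}} = 100,
\qquad
r = 100^{1/5} = 10^{0.4} \approx 2.51
$$

Under those assumptions, global capacity would need to grow roughly 2.5x every year to reach the projection.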

The newest MI400 part, the AMD Instinct MI440X GPU, targets on-premises enterprise artificial intelligence. It uses an eight-GPU form factor for training, fine-tuning and inference within existing infrastructure, and it builds on the previously announced AMD Instinct MI430X GPUs, which are intended for high-precision scientific, high-performance computing and sovereign artificial intelligence workloads in systems such as Discovery at Oak Ridge National Laboratory and the Alice Recoque exascale supercomputer.

Looking further ahead, AMD disclosed that AMD Instinct MI500 GPUs, planned for launch in 2027, will be based on the AMD CDNA 6 architecture, 2nm process technology and HBM4E memory, and are on track to deliver up to a 1,000x increase in AI performance compared with the AMD Instinct MI300X GPUs introduced in 2023.

On the client side, AMD introduced the Ryzen AI 400 Series and Ryzen AI PRO 400 Series platforms, which feature a 60 TOPS NPU and full AMD ROCm support, along with the Ryzen AI Max+ 392 and Ryzen AI Max+ 388 platforms, which support models of up to 128 billion parameters with 128GB of unified memory. The company also announced the Ryzen AI Halo Developer Platform, expected in Q2 2026, which it says will deliver leadership tokens-per-second-per-dollar for artificial intelligence developers.

At the edge, AMD launched Ryzen AI Embedded processors, including the P100 and X100 Series, aimed at automotive, healthcare and autonomous systems that need high-performance, efficient artificial intelligence compute within constrained embedded designs.