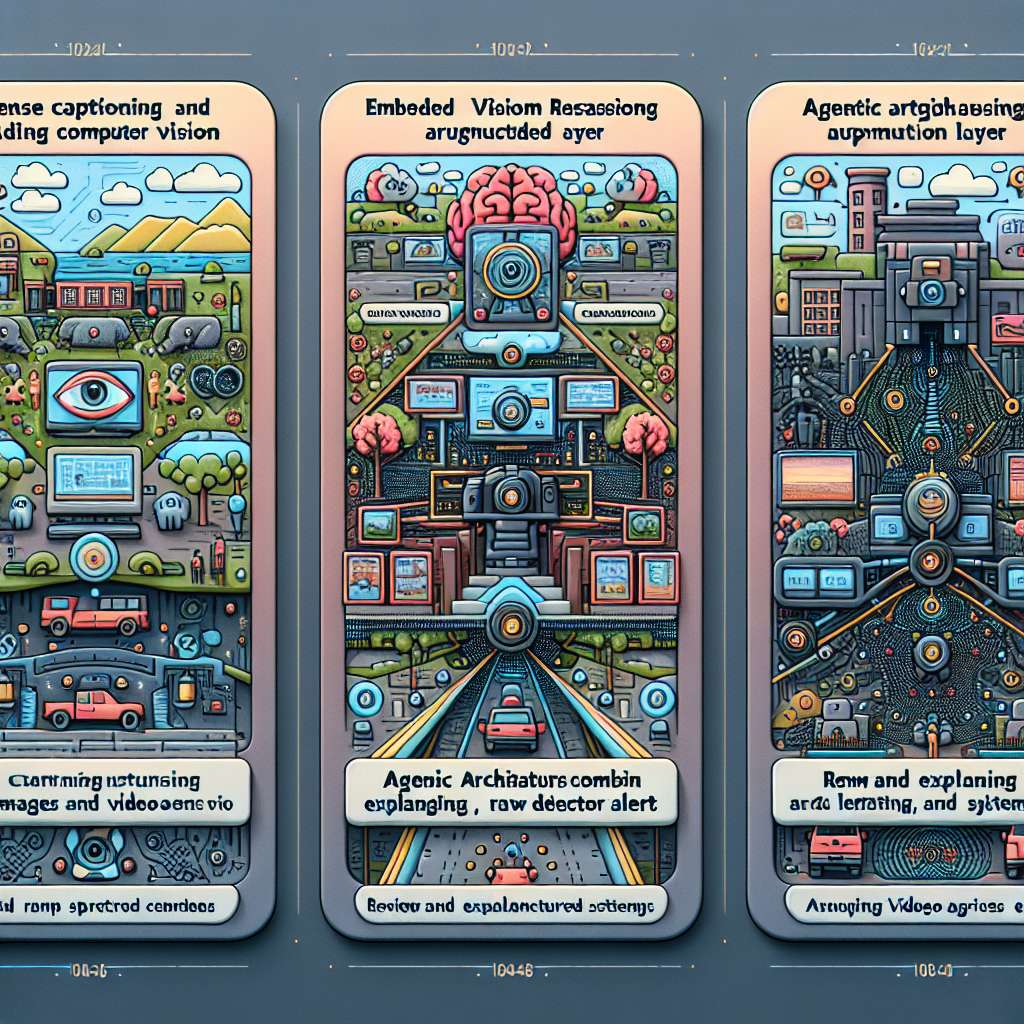

Three ways to bring agentic artificial intelligence to computer vision

Agentic artificial intelligence built on vision language models can augment legacy computer vision systems by generating dense captions, enriching alerts with contextual reasoning, and summarizing long video archives in response to complex queries.

Google DeepMind trains SIMA 2 with Gemini inside Goat Simulator 3

Google DeepMind upgraded its SIMA agent with Gemini to build SIMA 2, an artificial intelligence agent that navigates and learns across commercial games, including Goat Simulator 3, with the aim of informing future robot capabilities.

OpenAI builds transparent weight-sparse transformer to reveal how artificial intelligence models work

OpenAI has built an experimental, more transparent large language model that helps researchers trace how artificial intelligence systems compute. The model is smaller and slower than commercial products, but its internal mechanisms are much easier to study.

One thing enterprise artificial intelligence projects need to succeed? Community.

Discover how an intelligent, community-driven knowledge layer grounds probabilistic tools, helps prevent artificial intelligence hallucinations, and helps validate high-quality code.

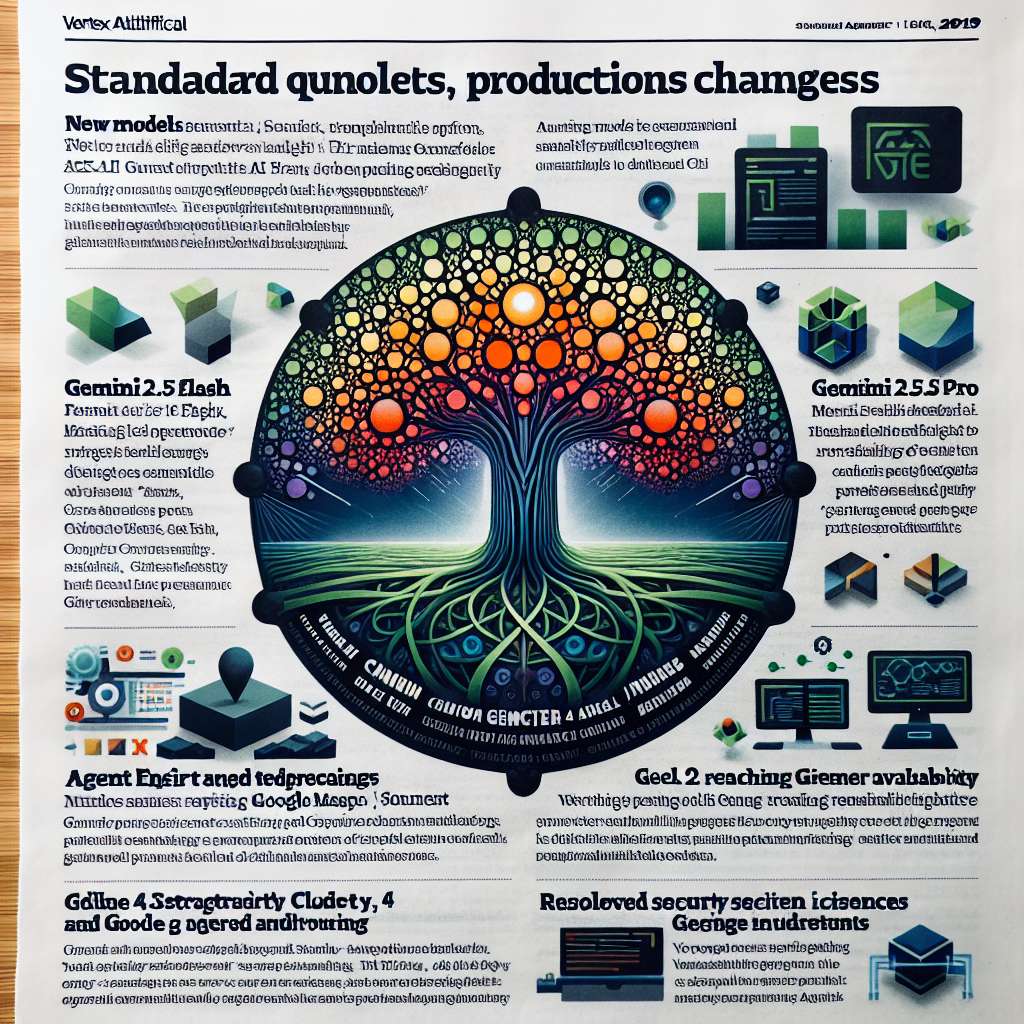

Vertex AI release notes

A chronological log of production updates for Vertex AI on Google Cloud, covering new models, platform features, deprecations, security notices, and tooling changes. The page serves as the authoritative source for feature launches and lifecycle changes, with entries through November 13, 2025.

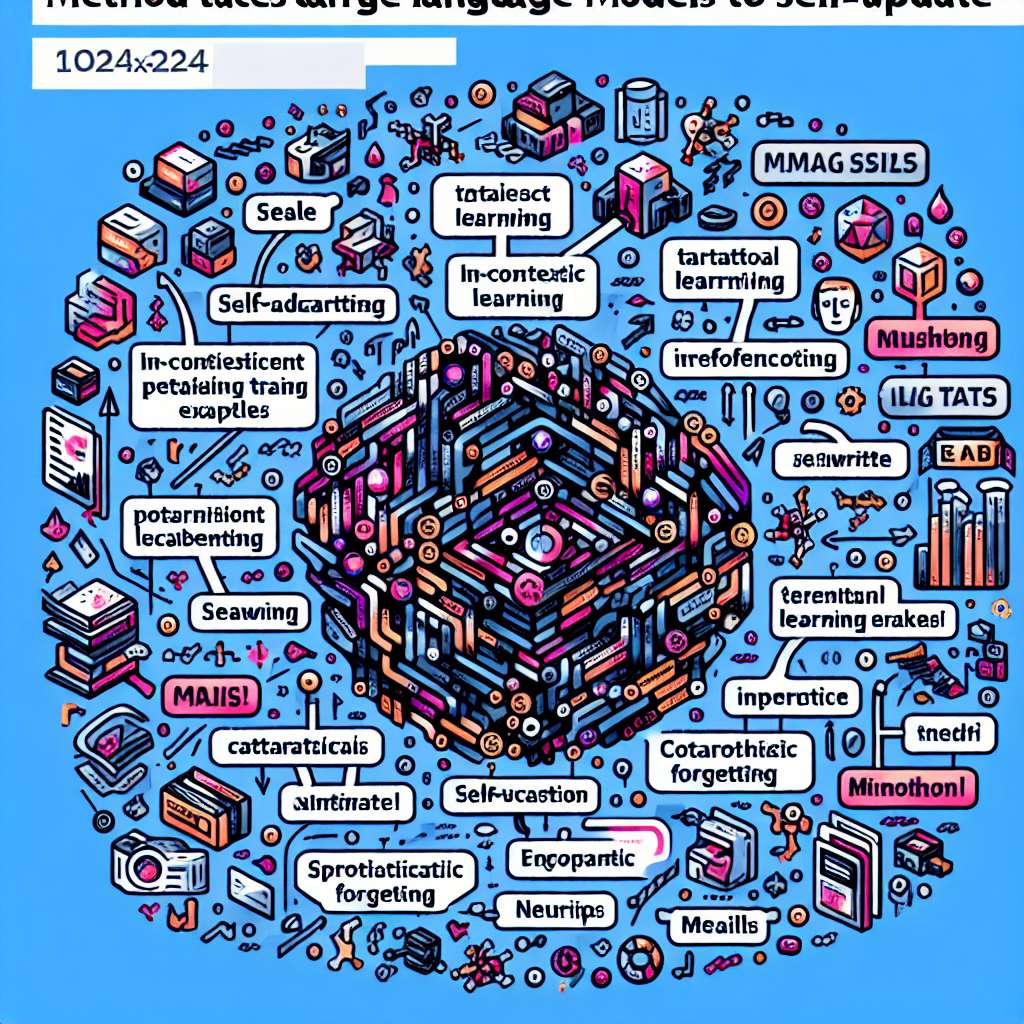

Teaching large language models how to absorb new knowledge

Researchers at MIT have developed a self-adapting framework that lets large language models permanently internalize new information by generating their own self-edits and learning from them. The method could help artificial intelligence agents update their knowledge between conversations and adapt to changing tasks.

OpenAI’s new LLM exposes the secrets of how artificial intelligence really works

OpenAI built an experimental, weight-sparse LLM designed to be far easier to inspect than typical models. The work aims to reveal the internal mechanisms behind hallucinations and other failures, and to increase how far artificial intelligence can be trusted.

Us2.ai advances cardiac imaging with multimodal artificial intelligence

Us2.ai highlights studies and partnerships showing how artificial intelligence applied to echocardiography can surface hidden cardiac amyloidosis and support broader structural heart disease workflows.

MMCTAgent enables multimodal reasoning over large video and image collections

MMCTAgent enables dynamic multimodal reasoning with iterative planning and reflection. Built on Microsoft’s AutoGen framework, it combines language, vision, and temporal understanding for complex tasks such as long video and image analysis.

NVIDIA sweeps MLPerf Training v5.1 artificial intelligence benchmarks

NVIDIA swept all seven tests in MLPerf Training v5.1, posting the fastest training times across large language models, image generation, recommender systems, computer vision, and graph neural networks. NVIDIA's was the only platform with results submitted on every test, a showing that highlights its GPUs and CUDA software stack.