Myrtle.ai has announced the release of its VOLLO inference accelerator on Napatech's NT400D1x series of SmartNICs, with machine learning inference compute latencies measured at less than one microsecond. Running inference directly on the SmartNIC hardware, close to the network, makes real-time inference practical for industries that demand ultra-fast response times.

VOLLO supports a broad range of machine learning models. Users can deploy long short-term memory (LSTM) networks, convolutional neural networks (CNNs), and multilayer perceptrons (MLPs), as well as tree-based ensemble methods such as random forests and gradient-boosted decision trees. This versatility suits it to advanced analytics and predictive modeling at the edge, avoiding the bottleneck of routing data to centralized servers for inference.

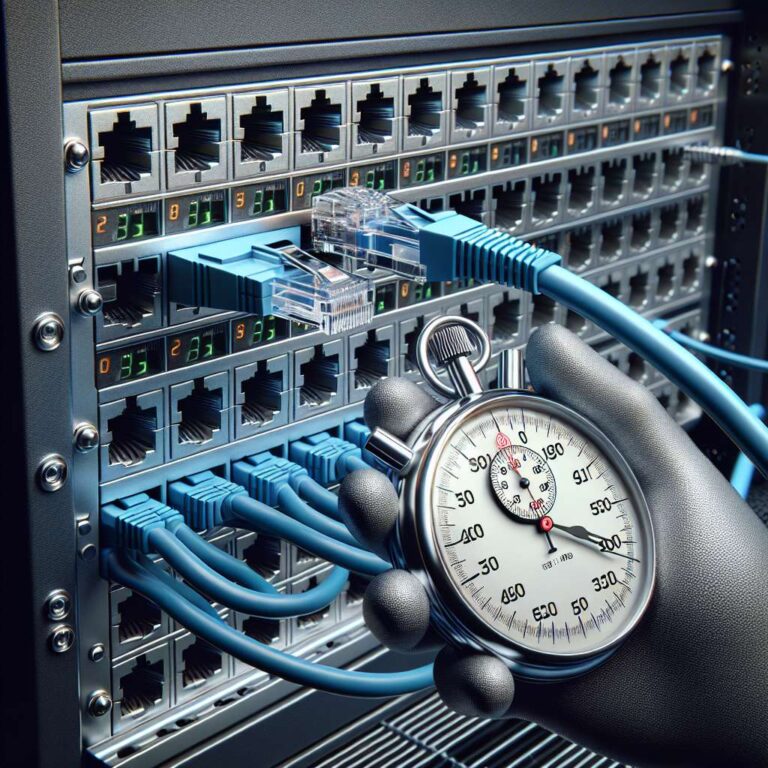

The technology is aimed at applications that depend on real-time computation: financial trading, where microseconds can decide profits; wireless telecommunications, where rapid data processing sustains network integrity; cybersecurity, where threats must be detected instantly; and network management, where operational adjustments must be made quickly. By offering extremely low-latency inference, Myrtle.ai and Napatech aim to help organizations improve security, safety, operational efficiency, and cost, a significant development in edge-based machine learning acceleration.