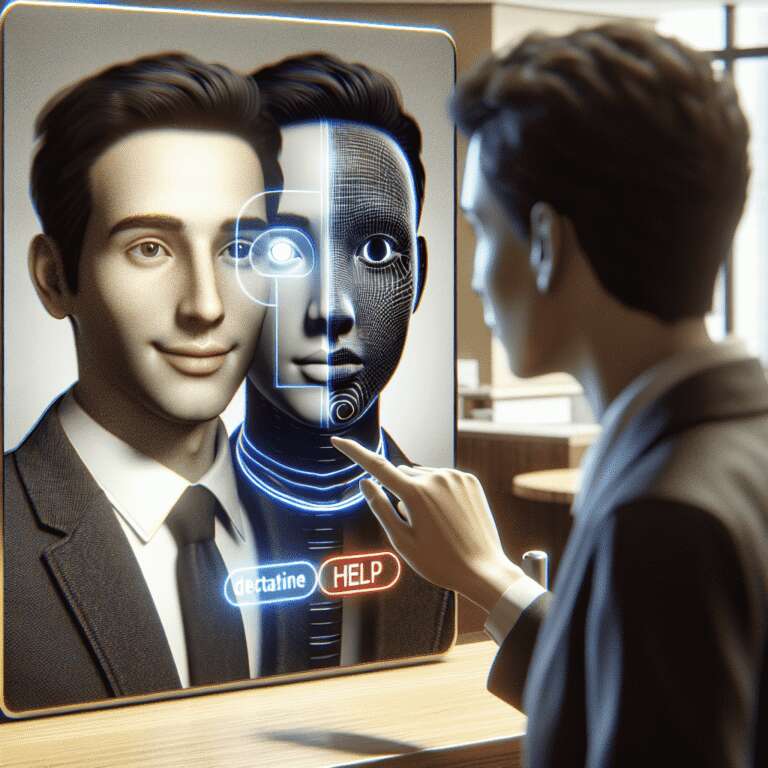

Chatbots powered by artificial intelligence (AI), once familiar mainly as website pop-ups, have rapidly grown in capability and prevalence with the rise of generative AI systems. Businesses now widely use chatbots in customer service, sales, and outreach to improve user experience and streamline operations. However, the design of these digital agents, including how human-like they seem and which interface features they offer, can significantly affect whether consumers trust and engage with them.

Scott Schanke, an assistant professor at the Lubar College of Business at the University of Wisconsin–Milwaukee (UWM), leads research on how the nuances of chatbot design influence public-facing business interactions. In a 2021 study, Schanke and his team worked with a secondhand retailer, building chatbots with varying human-like traits, such as telling jokes or using names. More anthropomorphic bots increased conversion rates, but they also led customers to push harder for better deals, whereas straightforward, bot-like agents prompted less negotiation. In emotionally charged contexts such as charitable giving, overly human chatbots were less effective, because strong emotional cues combined with anthropomorphism deterred potential donors. In these scenarios, logical, less human bots produced better outcomes.

Schanke's research also examines the growing influence of voice-cloning technologies, which can convincingly mimic an individual's voice from minimal audio input. Although these audio deepfakes are sometimes used playfully online, organizations are exploring their potential for customer service, which carries real risks. In experiments, participants were more likely to trust bots that spoke in a clone of their own voice, even when warned about possible deception; explicitly disclosing that the speaker was a bot did not significantly erode that trust. These findings raise concerns about manipulation and fraud, and they underscore the urgent need for forward-looking regulation and public awareness as generative artificial intelligence advances, both to protect consumers and to guide effective, ethical chatbot deployment.