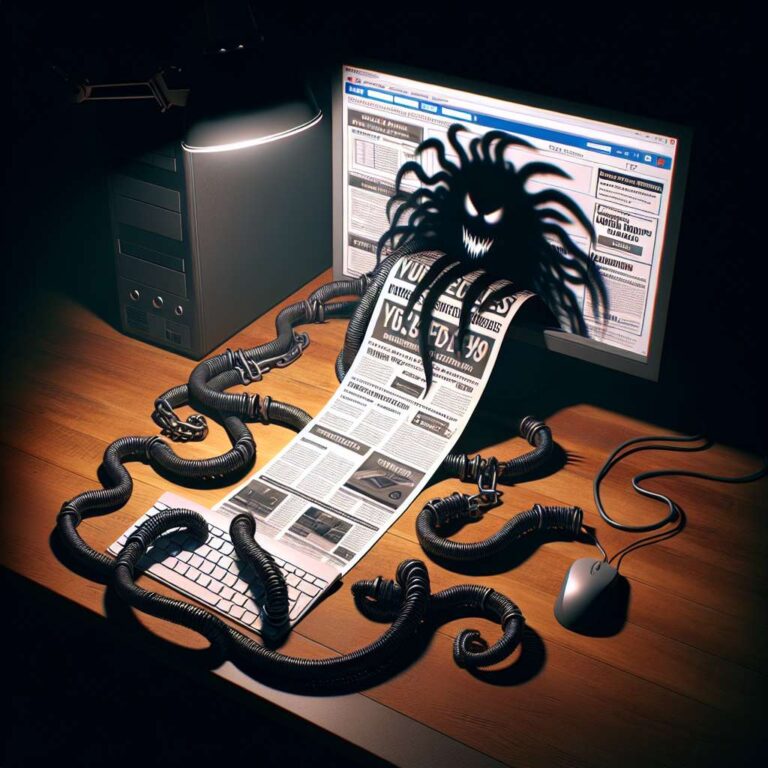

As enthusiasm for AI-based video creation tools surges, cybercriminal groups are exploiting the trend to distribute malware through fraudulent ads on Facebook. According to Mandiant, Google’s threat intelligence arm, the attackers craft advertisements that appear to promote reputable video generators such as Canva’s Dream Lab, Luma AI, and Kling AI. Instead of leading to the authentic services, the ads redirect users to counterfeit websites that deliver malware.

The group behind the campaign, tracked as UNC6032 and attributed to threat actors from Vietnam, has been operating since mid-2024 and relies on an evolving network of more than 30 fake domains. The fake sites distribute a range of threats, including Python-based information stealers and remote access backdoors, that allow the attackers to harvest sensitive information. The group rotates domain names regularly and uploads new ads on a near-daily cycle, which helps it evade Meta’s detection and takedown efforts. Although the majority of incidents occur on Facebook, some activity has spread to LinkedIn. Figures cited by Mandiant indicate the campaign’s reach: in the European Union alone, just 120 of these ads were delivered to more than 2.3 million users.

The group’s ultimate objective goes far beyond ad clicks. The harvested data includes login credentials, credit card details, browser cookies, and Facebook account information, all intended for secondary exploitation. While Meta has removed many of the fraudulent ads flagged since 2024, the persistence and agility of UNC6032 pose an ongoing challenge. Security researchers urge users not to click on ads for AI tools on social media platforms. Instead, they recommend searching for a tool’s official website directly, which significantly reduces the risk of malware exposure and data theft.
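
For readers who want to apply that advice programmatically, for example in a link-checking script, the idea can be sketched as a hostname allowlist check. This is a minimal illustration, not a method described by Mandiant: the `OFFICIAL_DOMAINS` set and the `is_official` helper are hypothetical, and the listed domains are assumptions that should be verified independently before use.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist. These domains are assumptions for illustration,
# not taken from the Mandiant report; verify each one independently.
OFFICIAL_DOMAINS = {"canva.com", "lumalabs.ai", "klingai.com"}


def is_official(url: str) -> bool:
    """Return True only if the URL's host is an allowlisted domain or a subdomain of one."""
    # hostname is None when the URL has no scheme or host, so fall back to "".
    host = (urlsplit(url).hostname or "").lower().rstrip(".")
    # Match the exact domain or a dot-separated subdomain, so that
    # "canva.com.example.net" does not pass as "canva.com".
    return any(host == d or host.endswith("." + d) for d in OFFICIAL_DOMAINS)
```

A check like this only confirms that a link points at a known domain. It does not detect malware, and a lookalike domain that is not on the list is simply rejected, which is the intended conservative behavior.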