Machine learning research has shifted notably: traditional, mathematically driven approaches now deliver only marginal improvements compared with compute-intensive strategies that leverage vast datasets. Mathematics, once central to providing insight in machine learning, now struggles to keep pace with the empirical advances of engineering-driven methods. This evolution reflects the enduring truth of the ‘Bitter Lesson’: scaled-up computation can often surpass theoretically refined approaches.

Despite rumors of its decline, mathematics is not becoming obsolete in machine learning; its role is evolving. Where it once focused on theoretical performance guarantees, mathematics is now used more to understand the behavior of models after training. This shift also allows broader integration with fields such as biology and the social sciences, giving researchers richer insight into how machine learning systems affect real-world tasks and society.

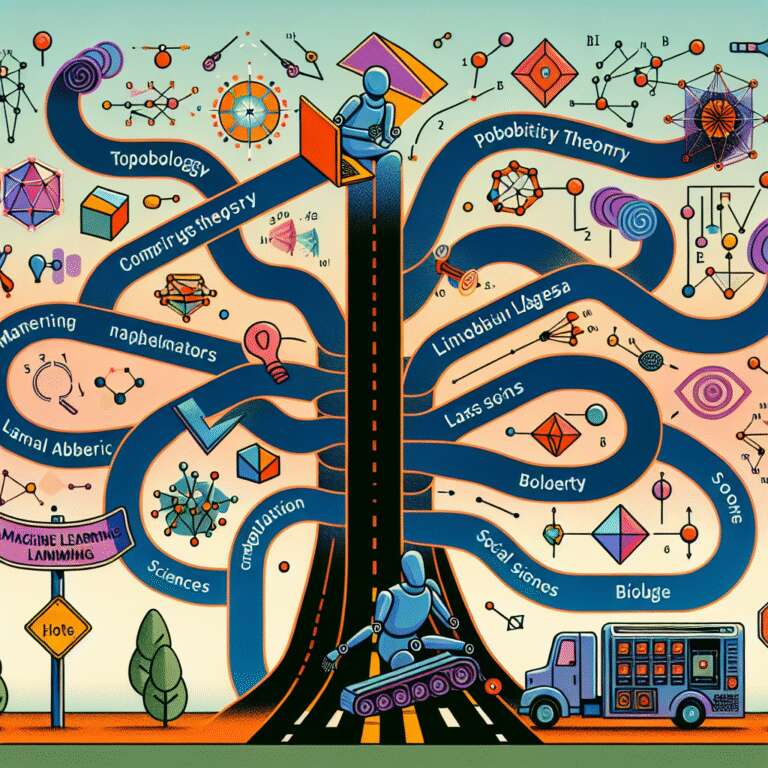

Furthermore, the shift toward scale has diversified the mathematical tools at hand, with pure fields such as topology and geometry joining probability theory and linear algebra. These areas offer new ways to tackle the complexity of deep learning, both in designing architectures and in understanding them. As machine learning models continue to consume and process data, they open the way for mathematics to explore and formalize the principles underlying various datasets, ultimately serving as a bridge to previously inaccessible scientific domains.