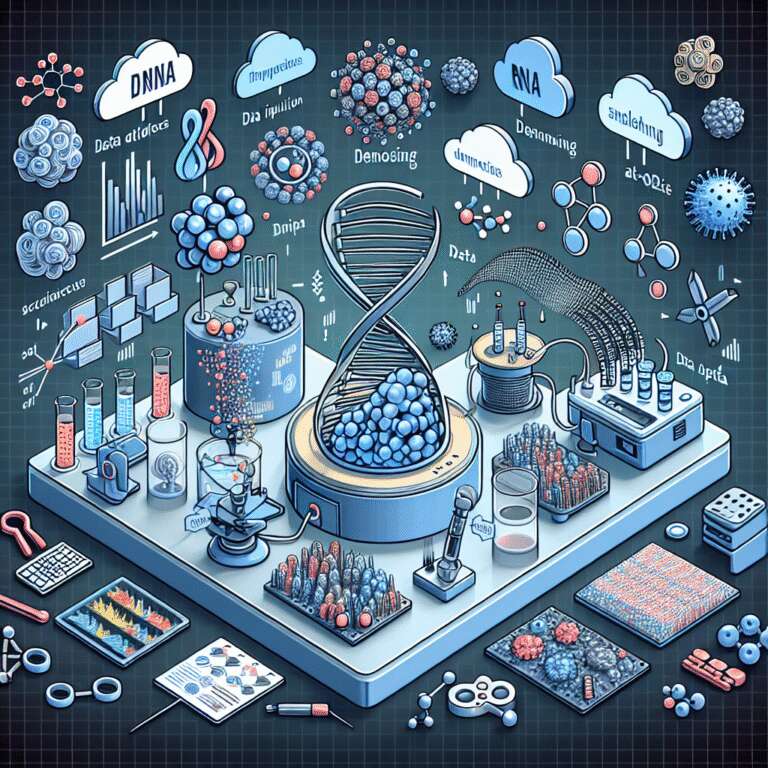

The article examines how deep learning is reshaping the analysis of single-cell sequencing data, which offers unprecedented insight into cellular diversity. Single-cell sequencing profiles DNA and RNA at the level of individual cells, which is crucial for understanding cellular heterogeneity. Since Nature Methods named the technique its ‘Method of the Year’ for 2013, the field has continued to advance, and deep learning has become an important tool for managing the complexity and volume of the data that sequencing produces.

The authors detail several applications of deep learning in this field, such as imputation and denoising of scRNA-seq data, which address technical problems like data sparsity and noise. They also highlight architectures such as autoencoders, which reduce dimensionality and help identify subpopulations of cells. In addition, they underscore the importance of batch effect removal and multi-omics data integration, which are needed to reconcile technical variation between datasets and to draw comprehensive biological insights from multimodal data.
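To make the autoencoder idea concrete, here is a minimal sketch in Python using only NumPy. It is not the method of any model discussed in the article: the toy count matrix, the dropout rate, the layer sizes, and the names `train_autoencoder` and `encode` are all assumptions chosen for illustration. It compresses log-transformed counts into a two-dimensional latent space and reconstructs them, which is the basic mechanism behind autoencoder-based dimensionality reduction and denoising.

```python
import numpy as np

def train_autoencoder(X, n_latent=2, lr=0.01, epochs=2000, seed=0):
    """Train a one-hidden-layer autoencoder with plain gradient descent.

    X is a (cells x genes) matrix of log-transformed counts.
    Returns the learned parameters and the mean squared error per epoch.
    """
    rng = np.random.default_rng(seed)
    n_genes = X.shape[1]
    W_enc = rng.normal(0.0, 0.1, size=(n_genes, n_latent))
    b_enc = np.zeros(n_latent)
    W_dec = rng.normal(0.0, 0.1, size=(n_latent, n_genes))
    b_dec = np.zeros(n_genes)
    losses = []
    for _ in range(epochs):
        # Forward pass: encode to the latent space, then decode.
        Z = np.tanh(X @ W_enc + b_enc)
        X_hat = Z @ W_dec + b_dec
        err = X_hat - X
        losses.append(float(np.mean(err ** 2)))
        # Backward pass: gradients of the mean squared error.
        g_out = 2.0 * err / err.size
        g_W_dec = Z.T @ g_out
        g_b_dec = g_out.sum(axis=0)
        g_pre = (g_out @ W_dec.T) * (1.0 - Z ** 2)  # derivative of tanh
        g_W_enc = X.T @ g_pre
        g_b_enc = g_pre.sum(axis=0)
        # Gradient descent update.
        W_enc -= lr * g_W_enc
        b_enc -= lr * g_b_enc
        W_dec -= lr * g_W_dec
        b_dec -= lr * g_b_dec
    params = {"W_enc": W_enc, "b_enc": b_enc, "W_dec": W_dec, "b_dec": b_dec}
    return params, losses

def encode(X, params):
    """Project cells into the low-dimensional latent space."""
    return np.tanh(X @ params["W_enc"] + params["b_enc"])

# Toy data (hypothetical): two cell populations, 30 cells each, 20 genes,
# with 30% of entries zeroed out to mimic dropout-induced sparsity.
rng = np.random.default_rng(42)
n_genes = 20
mean_a = rng.uniform(1, 10, n_genes)
mean_b = rng.uniform(1, 10, n_genes)
counts = np.vstack([
    rng.poisson(mean_a, size=(30, n_genes)),
    rng.poisson(mean_b, size=(30, n_genes)),
])
counts[rng.random(counts.shape) < 0.3] = 0
X = np.log1p(counts)  # common variance-stabilizing transform

params, losses = train_autoencoder(X)
embedding = encode(X, params)  # one 2-D coordinate per cell
```

The `embedding` array could then be passed to a clustering step to look for subpopulations. Models used in practice are typically deeper and often replace the squared-error loss with a count-based likelihood, so this should be read as a sketch of the mechanism only.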

Despite these advances, the article notes several challenges in applying deep learning to single-cell sequencing. It points to the need for robust benchmarking to validate model performance across diverse datasets. Integrating multi-omics data is also difficult because of noise and the diversity of the information captured for each cell. Nonetheless, overcoming these hurdles could lead to a deeper understanding of cellular function, with implications for healthcare and disease treatment strategies.