Agentic AI has become a popular label for systems that seem to take initiative: they monitor data, decide what to do next, and act without waiting for a human prompt. The term can sound grander than the reality. These systems are an important step for automation, but they are not artificial general intelligence (AGI). They are engineered loops built around modern language models and other components.

This article explains what agentic AI actually is, how it works, where it helps, where it fails, and how to design it responsibly.

What “agentic” really means

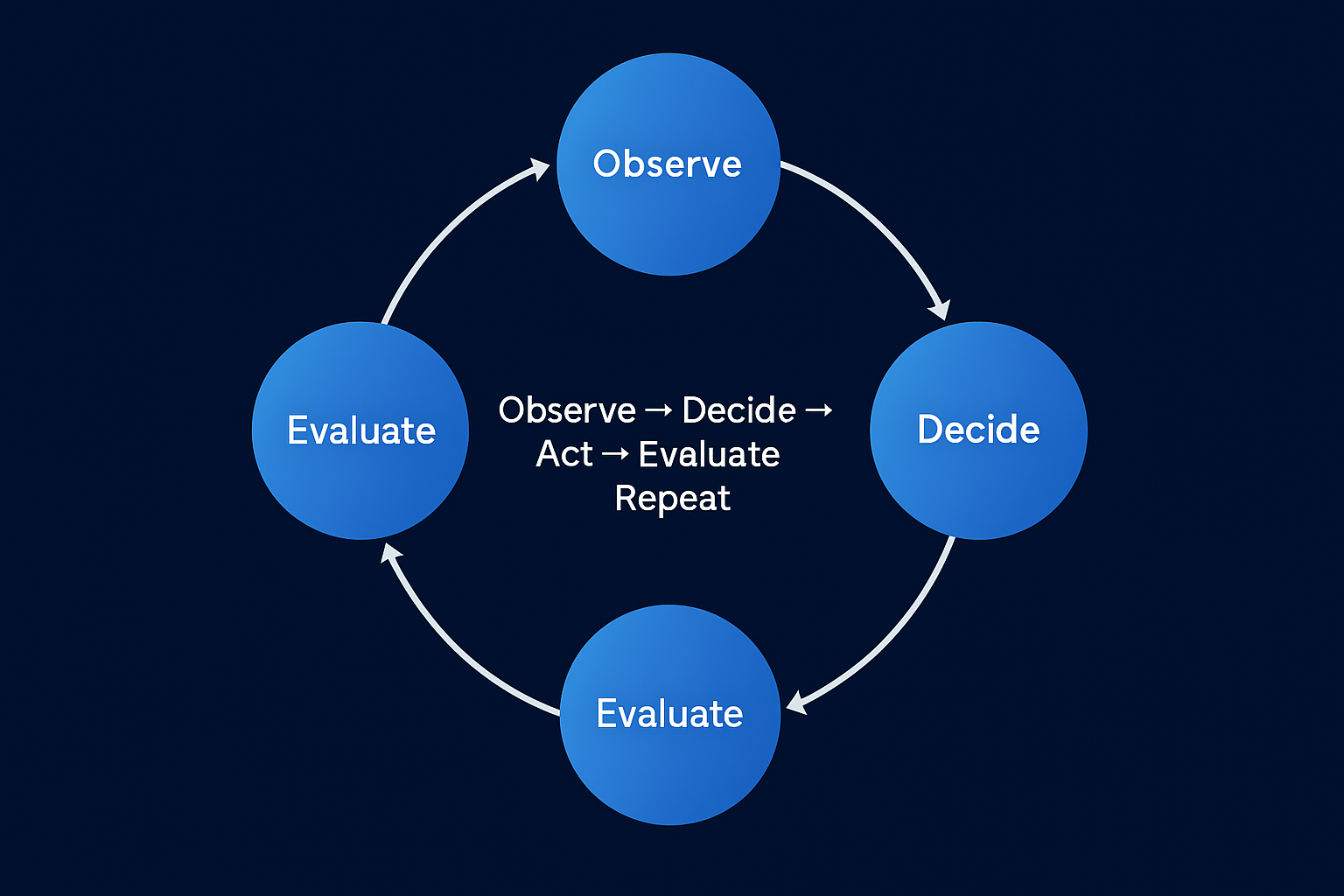

At the core of an agentic system is a repeatable loop:

- Observe: read the environment through APIs (software connectors), files, databases, web pages, emails, and sensors.

- Decide: plan the next step using an LLM or other decision logic.

- Act: call tools or services to do something.

- Evaluate: check outcomes, log results, update memory, and adjust the plan.

The “agency” comes from surrounding a reactive model with goals, memory, and tool access so it can keep working without constant instructions. There’s no consciousness involved – just well-designed scaffolding.
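The loop can be sketched in a few lines of Python. This is a minimal illustration, not a reference design: the `Agent` class, its method names, and the keyword rule standing in for an LLM are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Agent:
    """Hypothetical scaffold: a goal, a memory, and a set of callable tools."""
    goal: str
    tools: dict[str, Callable[[str], str]]
    memory: list[dict] = field(default_factory=list)

    def observe(self, event: str) -> str:
        # Observe: here the "environment" is just the incoming event text.
        return event.strip().lower()

    def decide(self, observation: str) -> str:
        # Decide: a keyword rule stands in for an LLM or other decision logic.
        return "alert" if "error" in observation else "log"

    def act(self, tool_name: str, observation: str) -> str:
        # Act: call the chosen tool.
        return self.tools[tool_name](observation)

    def evaluate(self, tool_name: str, result: str) -> bool:
        # Evaluate: check the outcome and record it in memory.
        ok = result.startswith("ok")
        self.memory.append({"tool": tool_name, "result": result, "ok": ok})
        return ok

    def step(self, event: str) -> bool:
        observation = self.observe(event)
        tool_name = self.decide(observation)
        result = self.act(tool_name, observation)
        return self.evaluate(tool_name, result)


agent = Agent(
    goal="triage incoming events",
    tools={
        "alert": lambda text: f"ok: alerted on '{text}'",
        "log": lambda text: f"ok: logged '{text}'",
    },
)
for event in ["Disk usage normal", "ERROR: payment API timeout"]:
    agent.step(event)
```

After the two events, `agent.memory` holds one record per step, which is the kind of trace the Evaluate stage relies on.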

How agentic AI actually works

A practical agentic stack usually includes:

1) Goals and policies

- A clear objective (for example, “publish today’s AI news before 9 a.m.”).

- Guardrails and policies (allowed tools, spending limits, escalation rules).
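One way to make guardrails concrete is to express them as data that every proposed action is checked against. The sketch below is an assumption-heavy illustration: the `Policy` fields, the dollar thresholds, and the tool names are invented for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    """Hypothetical guardrails: allowed tools, a spend cap, an escalation rule."""
    allowed_tools: frozenset[str]
    spend_limit_usd: float      # hard cap for the whole run
    escalate_above_usd: float   # single actions above this need a human


def check_action(policy: Policy, tool: str, cost_usd: float, spent_usd: float) -> str:
    """Return 'deny', 'escalate', or 'allow' for a proposed action."""
    if tool not in policy.allowed_tools:
        return "deny"
    if spent_usd + cost_usd > policy.spend_limit_usd:
        return "deny"
    if cost_usd > policy.escalate_above_usd:
        return "escalate"
    return "allow"


policy = Policy(
    allowed_tools=frozenset({"web_search", "wordpress_publish"}),
    spend_limit_usd=5.00,
    escalate_above_usd=1.00,
)
decision = check_action(policy, "web_search", cost_usd=0.10, spent_usd=0.0)
```

Keeping the policy separate from the agent's prompt means the limits hold even when the model's plan is wrong.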

2) Triggers and scheduling

- Cron jobs or event listeners (webhooks, message queues, file watchers).

- Priority rules so urgent events pre-empt routine tasks.
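A priority rule can be as simple as a heap-backed task queue. The following sketch uses Python's standard `heapq` module; the priority levels and task names are hypothetical. Note that it only reorders pending tasks. Interrupting a task that is already running would need additional machinery.

```python
import heapq
import itertools

URGENT, ROUTINE = 0, 1            # lower number means higher priority
_counter = itertools.count()      # tie-breaker keeps FIFO order within a priority
queue: list[tuple[int, int, str]] = []


def enqueue(priority: int, task: str) -> None:
    heapq.heappush(queue, (priority, next(_counter), task))


def next_task() -> str:
    _, _, task = heapq.heappop(queue)
    return task


enqueue(ROUTINE, "refresh dashboard")
enqueue(ROUTINE, "publish daily roundup")
enqueue(URGENT, "handle payment webhook")

# The urgent task is served first even though it arrived last.
order = [next_task() for _ in range(3)]
```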

3) Memory and state

- Short-term state (current task, intermediate outputs).

- Long-term memory (logs, embeddings, key/value stores).

- Retrieval for context, often via retrieval-augmented generation (RAG, a way to fetch relevant information before deciding).
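To show the retrieval idea without external services, the sketch below ranks memory entries by cosine similarity over word counts. Word counts are a deliberately crude stand-in for real embeddings, and the memory entries are invented for the example.

```python
import math
from collections import Counter


def vectorize(text: str) -> Counter:
    # Toy stand-in for an embedding: lowercase word counts.
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, memory: list[str], k: int = 1) -> list[str]:
    """Return the k memory entries most similar to the query."""
    q = vectorize(query)
    ranked = sorted(memory, key=lambda entry: cosine(q, vectorize(entry)), reverse=True)
    return ranked[:k]


long_term_memory = [
    "published roundup on open source model releases",
    "price update failed because the CMS API timed out",
    "weekly dashboard refresh completed",
]
context = retrieve("why did the price update fail", long_term_memory)
```

The retrieved entry would then be added to the model's prompt before the Decide step.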

4) Reasoning and planning

- An LLM that decomposes tasks and reasons through steps (methods like ReAct or Chain-of-Thought, which break work into smaller steps).

- Simple planners for predictable flows; more advanced ones for multi-step, branching work.
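The reason-then-act pattern can be illustrated with a scripted stand-in for the model. Everything here is hypothetical: `scripted_model` replaces a real LLM call, and the `Action: tool[argument]` text format is one possible convention, not a standard.

```python
from typing import Callable


def scripted_model(transcript: list[str]) -> str:
    """Hypothetical stand-in for an LLM: replies based on what it has seen so far."""
    if not any(line.startswith("Observation:") for line in transcript):
        return "Action: lookup_price[widget]"
    return "Final: the widget costs 4.50"


TOOLS: dict[str, Callable[[str], str]] = {
    "lookup_price": lambda item: {"widget": "4.50"}.get(item, "unknown"),
}


def run_react(question: str, max_steps: int = 5) -> tuple[str, list[str]]:
    transcript = [f"Question: {question}"]
    for _ in range(max_steps):
        reply = scripted_model(transcript)
        transcript.append(reply)
        if reply.startswith("Final:"):
            return reply.removeprefix("Final:").strip(), transcript
        # Parse "Action: tool[argument]", run the tool, and feed the result back.
        call = reply.removeprefix("Action:").strip()
        name, _, rest = call.partition("[")
        argument = rest.rstrip("]")
        transcript.append(f"Observation: {TOOLS[name](argument)}")
    return "gave up", transcript


answer, transcript = run_react("How much does a widget cost?")
```

The `max_steps` cap is the important design choice: it bounds cost and prevents a confused model from looping forever.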

5) Tool use and actions

- Function-calling to scripts and APIs (email, spreadsheets, CRM, CMS, cloud services) – in plain terms, the model requests a specific tool to run.

- Permissions and quotas for each tool, with audit logs.
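Permissions, quotas, and audit logs can live in a thin wrapper around the tools themselves. The sketch below assumes a hypothetical `ToolRegistry` class and a fake `send_email` tool; a real system would persist the log rather than keep it in memory.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ToolRegistry:
    """Hypothetical wrapper: only registered tools run, within a call quota."""
    tools: dict[str, Callable[..., str]] = field(default_factory=dict)
    quotas: dict[str, int] = field(default_factory=dict)   # remaining calls per tool
    audit_log: list[dict] = field(default_factory=list)

    def register(self, name: str, func: Callable[..., str], quota: int) -> None:
        self.tools[name] = func
        self.quotas[name] = quota

    def call(self, name: str, **kwargs) -> str:
        if name not in self.tools:
            self.audit_log.append({"tool": name, "args": kwargs, "status": "denied"})
            raise PermissionError(f"tool not permitted: {name}")
        if self.quotas[name] <= 0:
            self.audit_log.append({"tool": name, "args": kwargs, "status": "quota_exceeded"})
            raise RuntimeError(f"quota exhausted: {name}")
        self.quotas[name] -= 1
        result = self.tools[name](**kwargs)
        self.audit_log.append({"tool": name, "args": kwargs, "status": "ok"})
        return result


registry = ToolRegistry()
registry.register("send_email", lambda to, subject: f"sent '{subject}' to {to}", quota=1)
first = registry.call("send_email", to="ops@example.com", subject="Daily report")
```

A second call to `send_email` would raise `RuntimeError` and still leave an entry in `audit_log`, so refused actions are as visible as successful ones.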

6) Feedback, evaluation, and recovery

- Automatic checks (for example, did the post publish, and was the price updated?).

- Error handling, retries, and human-in-the-loop escalation.

- Metrics: cost, latency, success rates, drift.
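Checks, retries, and escalation fit together naturally in one helper. The sketch below is a minimal version under stated assumptions: `run_with_recovery`, the flaky publish function, and the list used as an escalation channel are all hypothetical.

```python
import time
from typing import Callable


def run_with_recovery(
    action: Callable[[], str],
    check: Callable[[str], bool],
    escalate: Callable[[str], None],
    max_attempts: int = 3,
    base_delay_s: float = 0.0,
) -> bool:
    """Retry an action until its check passes; escalate to a human if it never does."""
    last_error = "unknown"
    for attempt in range(1, max_attempts + 1):
        try:
            result = action()
            if check(result):
                return True
            last_error = f"check failed on result {result!r}"
        except Exception as exc:  # broad on purpose: any tool failure triggers a retry
            last_error = repr(exc)
        if attempt < max_attempts:
            time.sleep(base_delay_s * 2 ** (attempt - 1))  # exponential backoff
    escalate(f"gave up after {max_attempts} attempts: {last_error}")
    return False


attempts = {"count": 0}


def flaky_publish() -> str:
    # Simulated tool that times out twice before succeeding.
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ConnectionError("CMS timeout")
    return "published"


escalations: list[str] = []
ok = run_with_recovery(flaky_publish, lambda status: status == "published", escalations.append)
```

In production the delay would be non-zero and the escalation callback would notify a person, for example through a ticket or chat message.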

Together, these parts make the system seem proactive, but it still works inside human-set goals and boundaries.

A concrete example: a daily AI news agent

Goal: Publish a daily roundup of AI news.

Environment and tools: web search/scraping, summarizer, image generation, and WordPress API.

Control layer: n8n orchestrates the workflow and handles scheduling and retries.

Memory: logs of previous posts and a list of recent URLs to avoid duplicates.

Process:

- Observe

  - Scheduled trigger fires each morning.

  - The agent queries known sources and RSS feeds, then normalizes titles and URLs.

- Deduplicate

  - First pass: fuzzy matching with Levenshtein distance in Node.js.

  - Second pass: semantic similarity check using a compact LLM (for example, GPT-5 nano) to catch near-duplicates with different headlines.

- Decide

  - Rank stories by relevance, novelty, and source quality.

  - Build a coverage plan: which items to include, which to discard, and the order of presentation.

- Act

  - Generate concise summaries with citations.

  - Create a header image.

  - Post to WordPress via API with categories, tags, and scheduled time.

- Evaluate

  - Verify the post status is “published.”

  - Update the memory store with the day’s URLs and titles to avoid future duplicates.

  - Emit metrics for run time, tokens, and success/failure.

This is an agent. It continuously executes toward a goal with minimal supervision. It’s not imaginative or sentient, but it’s dependable and fast.
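The first deduplication pass can be sketched as follows. The workflow described above runs this step in Node.js; the version here is in Python purely for illustration, and the 20% edit-distance threshold and the sample headlines are assumptions, not values from the actual pipeline.

```python
def levenshtein(a: str, b: str) -> int:
    """Classic dynamic-programming edit distance, keeping one row at a time."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            current.append(min(
                previous[j] + 1,                        # deletion
                current[j - 1] + 1,                     # insertion
                previous[j - 1] + (char_a != char_b),   # substitution
            ))
        previous = current
    return previous[-1]


def normalize(title: str) -> str:
    # Lowercase and collapse whitespace so trivial differences do not count.
    return " ".join(title.lower().split())


def is_near_duplicate(a: str, b: str, max_ratio: float) -> bool:
    a, b = normalize(a), normalize(b)
    return levenshtein(a, b) <= max_ratio * max(len(a), len(b))


def dedupe_titles(titles: list[str], max_ratio: float = 0.2) -> list[str]:
    """Keep a title only if it is not a near-duplicate of one already kept."""
    kept: list[str] = []
    for title in titles:
        if not any(is_near_duplicate(title, other, max_ratio) for other in kept):
            kept.append(title)
    return kept


headlines = [
    "OpenAI releases new model",
    "OpenAI Releases New Model!",
    "EU passes AI Act amendments",
]
unique_headlines = dedupe_titles(headlines)
```

Edit distance only catches headlines that are spelled almost the same, which is why the workflow adds a semantic second pass for rewritten headlines.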

Minimal pseudocode

    while schedule.triggered():
        sources = collect_sources()                # Observe
        unique = dedupe_levenshtein(sources)       # Deduplicate: fuzzy title match
        unique = dedupe_semantic(unique)           # Deduplicate: compact LLM check
        candidates = score_rank(unique)            # Decide: rank what is left
        plan = select_top(candidates, policy)
        assets = summarize_and_image(plan)         # Act
        publish_to_wordpress(assets)
        log_and_update_memory(plan, assets)        # Evaluate
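Two of the helpers named in the pseudocode, `score_rank` and `select_top`, could look roughly like this. The article says stories are ranked by relevance, novelty, and source quality but gives no formula, so the weighted sum, the weights, and the `Story` fields are assumptions. For brevity, `select_top` takes a plain limit here instead of a policy object.

```python
from dataclasses import dataclass


@dataclass
class Story:
    title: str
    relevance: float       # 0..1, topical match to the roundup
    novelty: float         # 0..1, higher if unseen in recent posts
    source_quality: float  # 0..1, editorial trust in the outlet


# Hypothetical weights; the article does not specify any.
WEIGHTS = {"relevance": 0.5, "novelty": 0.3, "source_quality": 0.2}


def score(story: Story) -> float:
    return (
        WEIGHTS["relevance"] * story.relevance
        + WEIGHTS["novelty"] * story.novelty
        + WEIGHTS["source_quality"] * story.source_quality
    )


def score_rank(stories: list[Story]) -> list[Story]:
    """Order stories from highest to lowest score."""
    return sorted(stories, key=score, reverse=True)


def select_top(ranked: list[Story], limit: int) -> list[Story]:
    """Build the coverage plan by keeping the first `limit` stories."""
    return ranked[:limit]


stories = [
    Story("Minor SDK patch", relevance=0.8, novelty=0.2, source_quality=0.5),
    Story("Major model launch", relevance=0.9, novelty=0.8, source_quality=0.7),
    Story("Regulation update", relevance=0.4, novelty=0.9, source_quality=0.9),
]
plan = select_top(score_rank(stories), limit=2)
```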

Where agentic AI shines

Agentic systems are most valuable where the business needs constant awareness and fast, low-risk decisions. They monitor live data, react to changes, and carry out routine actions that previously stalled in queues or inboxes. In practice, that means turning slow handoffs into immediate execution: refreshing dashboards, enriching data, updating product content, publishing scheduled items, or notifying teams when approvals are required.

The business impact is reduced latency from insight to action and a measurable lift in operational consistency. When repetitive steps run themselves, people spend time on higher-leverage work instead of process babysitting.

Why this still isn’t AGI

Agentic systems don’t understand context like people do. They predict likely outputs and follow instructions inside guardrails. Their “learning” is remembering prior outcomes and applying rules more efficiently – they don’t form new goals or challenge assumptions. That limitation is also what makes them dependable. Treat them as scalable process operators that improve speed and quality, not as independent thinkers. The term “agent” describes how they work, not what they are.

Design tips for robust agents

Effective agents start narrow. Give each one a measurable objective, clear ownership, and tight permissions. Use event-driven triggers to avoid constant polling and unnecessary cost. Log every input, output, and tool call to create a full audit trail for compliance and debugging.

Keep a human in the loop for high-impact changes or low-confidence results, and cascade models so smaller ones handle routine cases while larger models – or people – decide on edge cases. Version prompts and workflows so behavior is reproducible and issues are easy to roll back. In short: manage agents like employees with defined responsibilities and oversight.
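The cascade idea can be sketched as a function that tries a small model first and falls through to a larger model, then to a person. The classifiers below are hard-coded stubs and the confidence thresholds are hypothetical; real models would need calibrated confidence scores for this to work well.

```python
from typing import Callable

Classifier = Callable[[str], tuple[str, float]]  # returns (label, confidence)


def cascade(
    item: str,
    small: Classifier,
    large: Classifier,
    small_threshold: float = 0.9,
    large_threshold: float = 0.7,
) -> tuple[str, str]:
    """Return (label, decided_by); fall through to a human when both models are unsure."""
    label, confidence = small(item)
    if confidence >= small_threshold:
        return label, "small_model"
    label, confidence = large(item)
    if confidence >= large_threshold:
        return label, "large_model"
    return "needs_review", "human"


def small_stub(text: str) -> tuple[str, float]:
    # Stand-in for a compact model: confident only on obvious cases.
    return ("duplicate", 0.95) if "same" in text else ("unique", 0.5)


def large_stub(text: str) -> tuple[str, float]:
    # Stand-in for a larger model: confident on a wider set of cases.
    return ("unique", 0.8) if "new" in text else ("unique", 0.4)


routine = cascade("same story as yesterday", small_stub, large_stub)
harder = cascade("new angle on old story", small_stub, large_stub)
edge = cascade("ambiguous item", small_stub, large_stub)
```

Logging which tier made each decision also gives a simple metric for how often work reaches the expensive model or a human.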

What’s next

The next wave is about systems. Expect stronger long-term memory so agents can track multi-week objectives, supervisor patterns that coordinate specialized agents, automatic evaluators that check work before it reaches production, and governance layers that standardize access, cost control, and traceability.

None of this turns agents into AGI, but it will make automation far more reliable and auditable – exactly what operations teams need to trust it at scale.

Bottom line

Agentic AI is a practical shift from prompt-and-reply tools to goal-driven, continuously operating systems. By combining scheduling, memory, reasoning, and tool use, agents automate multi-step work in a way that feels proactive while staying within clear human-defined boundaries. Design them with guardrails and measurement, and they’ll quietly shorten cycles, cut error rates, and free people to focus on decisions that truly move the business.