Orders for artificial intelligence (AI) gear are pouring in across the industry, not just at chip giant Nvidia. The article reports that AMD has secured deals with Meta, Microsoft, OpenAI and Oracle. Cisco has already taken in AI orders this year, and Broadcom revealed that it landed a contract for racks built on its XPU chips. The surge in compute and infrastructure spending is colliding with constraints that data centers were already wrestling with: power, cooling and networking demands.

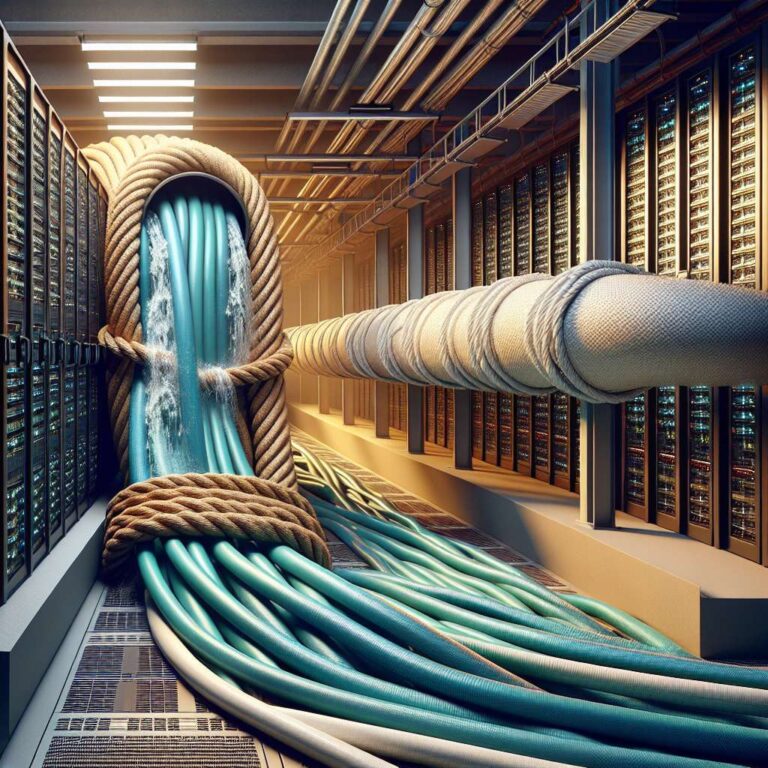

Beyond those limits, memory bandwidth is emerging as a central bottleneck for scaling AI. Experts quoted in the article say GPUs are often held back because they must reach external memory over interconnects that slow data movement, and CPUs hit the same wall. J. Gold Associates founder Jack Gold is quoted as saying that moving memory closer to GPUs and making it faster yields major performance improvements. ScaleFlux VP of products JB Baker described the mismatch as a gap between how many calculations chips can perform and how much memory bandwidth is available to feed them, comparing it to draining a massive water source through a garden hose.
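Baker's garden-hose comparison can be made concrete with the roofline model, a standard performance model that the article itself does not mention. The sketch below assumes a hypothetical accelerator with 1,000 TFLOP/s of peak compute and 4 TB/s of memory bandwidth; these figures are illustrative only and are not specifications of any product named here. A workload's arithmetic intensity (FLOPs performed per byte read from memory) decides whether it is limited by compute or by bandwidth.

```python
def attainable_flops(peak_flops: float, mem_bandwidth: float, arithmetic_intensity: float) -> float:
    """Roofline model: throughput is capped by compute or by memory bandwidth.

    peak_flops: peak compute rate in FLOP/s
    mem_bandwidth: memory bandwidth in bytes/s
    arithmetic_intensity: FLOPs performed per byte moved from memory
    """
    return min(peak_flops, mem_bandwidth * arithmetic_intensity)


def ridge_point(peak_flops: float, mem_bandwidth: float) -> float:
    """Arithmetic intensity (FLOP/byte) needed before compute becomes the limit."""
    return peak_flops / mem_bandwidth


def compute_utilization(peak_flops: float, mem_bandwidth: float, arithmetic_intensity: float) -> float:
    """Fraction of peak compute that the memory system can keep busy."""
    return attainable_flops(peak_flops, mem_bandwidth, arithmetic_intensity) / peak_flops


# Hypothetical accelerator (assumed numbers, not a real product spec).
PEAK_FLOPS = 1000e12  # 1,000 TFLOP/s
MEM_BW = 4e12         # 4 TB/s

if __name__ == "__main__":
    print(f"ridge point: {ridge_point(PEAK_FLOPS, MEM_BW):.0f} FLOP/byte")
    for intensity in (2, 50, 250, 1000):
        util = compute_utilization(PEAK_FLOPS, MEM_BW, intensity)
        print(f"intensity {intensity:>5} FLOP/byte -> {util:.1%} of peak compute")
```

With these assumed numbers, any workload below 250 FLOP per byte leaves part of the chip waiting on memory: at 2 FLOP per byte only 0.8% of peak compute is usable. Raising bandwidth, rather than adding more compute, is what lifts the slanted part of the roof.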

There is no single cure, but steps are underway. The article cites Compute Express Link (CXL), solid-state drive (SSD) advances and vendor efforts such as Nvidia's NVLink and Storage-Next initiatives as partial solutions. It also names other players working to speed up memory access, including Intel spin-off Cornelis. Baker argued that on-premises adopters must rebalance capital expenditures to invest in memory, storage and networking as well as GPUs, warning that underinvesting in those areas wastes GPU spending and burns power on idle processors.