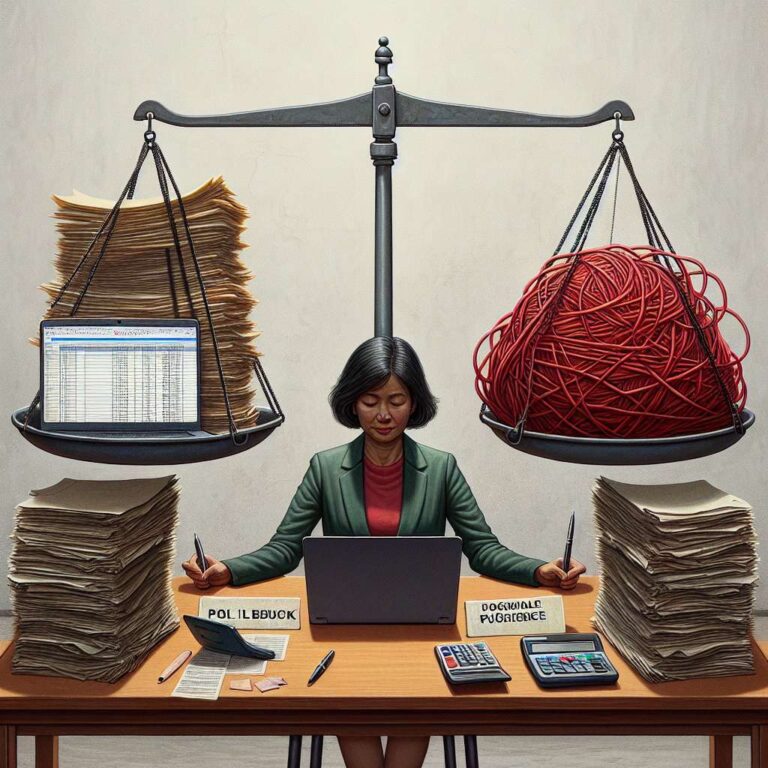

In a bold initiative that captivated digital rights advocates and algorithmic fairness experts alike, Amsterdam embarked on a multi-year experiment to use an algorithm, dubbed "Smart Check," to identify welfare application fraud. City officials, motivated by a decades-old culture of distrust in the Dutch welfare system and a desire to address analog biases, believed that the modern tools of responsible artificial intelligence (transparency, audits, and the exclusion of sensitive demographic factors) could deliver a more just and efficient process than human caseworkers. The stakes were high: at least €500,000 and significant public trust hinged on whether a model trained on thousands of historical cases could fairly automate oversight of welfare determinations while avoiding discrimination and upholding citizens' rights.

Yet despite rigorous bias testing, external consultation, and reweighting of the training data, Smart Check produced unsettling results. Initial runs of the algorithm showed sharp bias against migrants and men, which developers corrected through successive rounds of adjustment. The live pilot, however, revealed new patterns of discrimination: this time, Dutch nationals and women were wrongfully flagged more often, along with applicants with children. Internal analysis also showed that the human caseworkers' manual process had its own biases, sometimes opposite in direction to the algorithm's. Efforts to align the model with mathematical definitions of fairness only highlighted how difficult it is to create standards that don't produce new forms of inequity or obscure existing ones.

With nearly 1,600 welfare applications subjected to the algorithm's scrutiny and public skepticism running high, the project was terminated in November 2023. Stakeholder groups such as the Participation Council, advocacy organizations, and some city council members expressed deep concerns that the system's risks outweighed its intended benefits, and suggested that algorithmic solutions were being sought for social issues that are fundamentally matters of policy. Critics argued that systemic social distrust and administrative complexity, rather than technological shortcomings, were the root problems. As the city reverted to its analog, yet still demonstrably biased, review process, the experiment exposed a stark reality: no amount of algorithmic transparency or model tweaking can substitute for genuine civic debate about what fairness means, who defines it, and when technology should be entrusted with vital human decisions. The sobering outcome in Amsterdam serves as a cautionary tale as more governments seek to integrate artificial intelligence into social welfare and public services.