Generative artificial intelligence models, especially large language models and multimodal systems, have sparked speculation that artificial general intelligence (AGI) is close at hand. Despite their impressive benchmark results, these models rely fundamentally on scale and vast amounts of data rather than on genuine insight into human-like intelligence. Some in the field believe that piecing together large models across language, vision, and other modalities forms a pathway to AGI, but this article contends that such multimodal strategies are destined to fall short of human-level generality. Real intelligence, it argues, must be grounded in embodied, interactive experience, a capability that current modular, modality-specific approaches ignore.

Definitions of AGI that focus exclusively on generality via symbol manipulation, especially in language, miss vital domains such as sensorimotor reasoning, physical problem-solving, and social coordination. Many essential tasks, from repairing a car to preparing a meal, require an agent to build and act on a world model informed by physical interaction, not just by language-like data. The article critiques the notion that language models form deep, general models of reality simply through next-token prediction. A model can learn sophisticated token-prediction heuristics that yield outputs which seem world-aware, but studies show this apparent knowledge often reflects memorized symbol patterns rather than an understanding of the world. Widely cited results, such as a model trained on Othello move sequences appearing to represent the board state, do not carry over to the real world: real-world reasoning cannot be reduced to symbol manipulation alone. A true world model must predict the state of the physical world, not just the sequence of symbols that describe it.

The article draws on the linguistic distinctions between syntax, semantics, and pragmatics, showing that language models can produce syntactically correct language that is semantically or pragmatically absurd by human standards. This suggests that current large models may sidestep genuine understanding, substituting brute-force memorization for flexible, reasoned comprehension. The piece also revisits "the bitter lesson" of artificial intelligence, as articulated by Rich Sutton, noting that while scaling up computation has driven stunning progress, the idea that all inductive biases or architectural assumptions should be discarded is misguided. Advances such as convolutional neural networks and transformers resulted from human insight into the structure of their problems. The multimodal approach to AGI, commonly conceived as stitching together experts on narrow modalities, itself encodes assumptions about how perception and action ought to be separated, and it misses the fact that human intelligence is a continuous, embodied process in which such divisions blur.

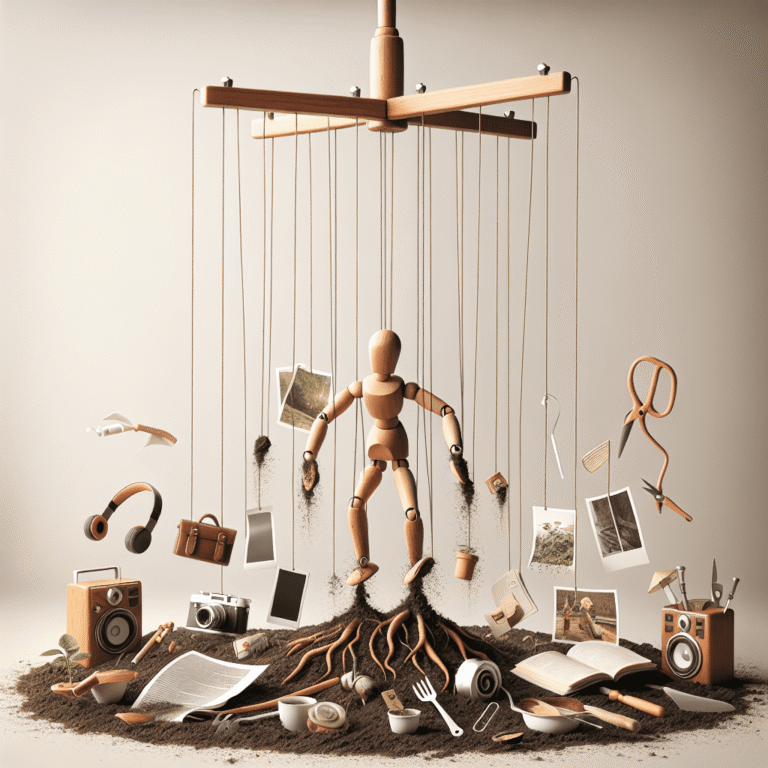

Multimodal models, for instance, force-fit different senses into separate modules, hoping that a shared embedding space can reconcile them. Yet this design often cannot synthesize concepts flexibly across modalities the way human cognition naturally does. The solution, according to the article, is to let modality-specific processing emerge from interactive experience rather than enforcing it through data or architecture. Such models would process text, images, and actions through unified perception and output systems, sacrificing some efficiency in exchange for greater cognitive flexibility and a better chance at true generalization. The challenge of AGI is therefore less mathematical, since universal function approximators already exist, and more a matter of finding the right functional organization for the capabilities required. The article concludes that if the field keeps optimizing models for narrow tasks under a scale-maximalist philosophy, true AGI will remain elusive.
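To make the modular design criticized above concrete, here is a minimal sketch, assuming a toy setup that is not taken from the article: each modality gets its own separately defined encoder (a fixed random linear projection standing in for a trained network), both encoders map into one shared embedding space, and cross-modal relatedness is read off as cosine similarity. The names `random_projection`, `encode`, `cosine`, and `EMBED_DIM` are hypothetical illustrations, not any real library's API.

```python
import math
import random

random.seed(0)

EMBED_DIM = 4  # size of the hypothetical shared embedding space


def random_projection(in_dim, out_dim):
    """Stand-in for a trained modality-specific encoder: a fixed random linear map."""
    return [[random.gauss(0.0, 1.0) for _ in range(out_dim)] for _ in range(in_dim)]


def encode(features, weights):
    """Project a feature vector into the shared space, then L2-normalize it."""
    out_dim = len(weights[0])
    z = [sum(features[i] * weights[i][j] for i in range(len(features)))
         for j in range(out_dim)]
    norm = math.sqrt(sum(v * v for v in z)) or 1.0  # guard against a zero vector
    return [v / norm for v in z]


def cosine(a, b):
    """For unit-length vectors, cosine similarity is just the dot product."""
    return sum(x * y for x, y in zip(a, b))


# One encoder per modality, fixed by design rather than emerging from interaction.
text_encoder = random_projection(in_dim=6, out_dim=EMBED_DIM)    # hypothetical text features
image_encoder = random_projection(in_dim=10, out_dim=EMBED_DIM)  # hypothetical image features

text_input = [random.gauss(0.0, 1.0) for _ in range(6)]
image_input = [random.gauss(0.0, 1.0) for _ in range(10)]

z_text = encode(text_input, text_encoder)
z_image = encode(image_input, image_encoder)
score = cosine(z_text, z_image)
print(f"cross-modal similarity: {score:.3f}")
```

The separation between modalities is written into the code before any data is seen: the only place text and images meet is the shared vector space at the end. The unified alternative the article proposes would not start from a per-modality encoder like these, leaving any such specialization to emerge from experience.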