The European Commission’s executive vice president, Henna Virkkunen, has indicated that the European Union may postpone parts of its landmark Artificial Intelligence Act, citing delays in finalizing the standards and guidelines needed to implement it. Speaking at a gathering of EU digital ministers in Luxembourg, Virkkunen said that if these implementation materials are not ready in time, postponing parts of the Act should remain an option. The Artificial Intelligence Act, agreed in late 2023, was heralded as the world’s first comprehensive framework to govern the risks posed by artificial intelligence technologies. Its first bans and restrictions took effect in February 2025, and further key requirements are scheduled to be phased in throughout 2025 and 2026.

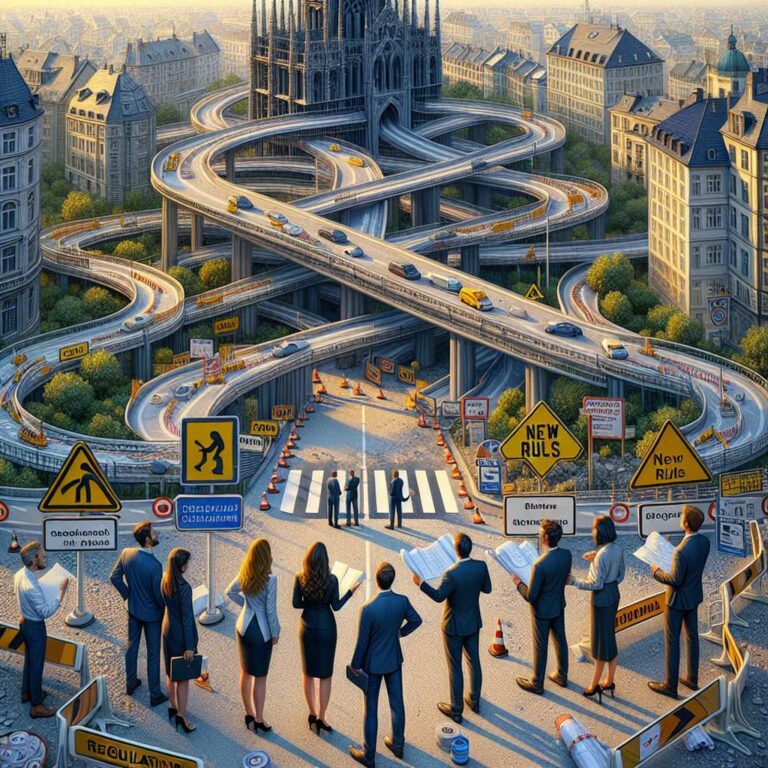

However, the regulatory rollout has hit significant turbulence. Companies bound by the new law have complained that they lack the specific technical guidance and finalized standards they need to comply. Lobbying intensified after Donald Trump’s election as U.S. president, with both American officials and business leaders calling for the rules to be delayed or clarified. A central code of practice, intended to guide companies developing complex artificial intelligence models that face new obligations from August, remains unfinished. As a result, industry stakeholders and some EU member states have called for a "stop-the-clock" mechanism that would pause the law’s application if regulatory clarity is not achieved in time.

At the Luxembourg ministerial meeting, several EU officials expressed openness to temporary delays. Poland’s junior digital minister, Dariusz Standerski, told POLITICO that industry requests for a postponement are "reasonable," but insisted that any further delay should come with a concrete action plan. Standerski also noted ongoing efforts to simplify the law without simply cutting regulations, pointing to the need for impact assessments and lower compliance costs for businesses. The debate reflects a broader EU review of its approach to technology regulation, which seeks to balance incentives for innovation against safeguards meant to minimize the harmful societal effects of artificial intelligence systems.