Artificial intelligence has rapidly become a transformative force in industry and society, triggering a wave of investment and regulatory initiatives. In response to soaring interest and market potential, the European Commission unveiled the InvestAI initiative, which aims to mobilise €200 billion for Artificial Intelligence infrastructure, alongside major private-sector investments led by industry giants such as OpenAI and Oracle. While the potential benefits of Artificial Intelligence are vast, lawmakers worldwide are moving to address the technology’s dual-use risks, placing both regulatory scrutiny and antitrust enforcement firmly in the spotlight.

The centerpiece of Europe’s approach is the EU Artificial Intelligence Act, adopted in June 2024. This landmark regulation creates a risk-based framework that obliges providers, distributors, manufacturers, and deployers, whether established inside or outside the EU, to comply with obligations that scale with an Artificial Intelligence system’s risk profile. Outright prohibitions now apply to practices posing ‘unacceptable risk’ to fundamental rights, with fines of up to €35 million or 7% of worldwide annual turnover, whichever is higher. High-risk systems face conformity assessments and extensive compliance requirements, while limited-risk systems are subject to transparency obligations, for example disclosing that content is artificially generated or that users are interacting with a chatbot. The regulation also singles out general-purpose models posing systemic risk for ongoing risk-management and reporting duties. Copyright, data protection, and product liability rules have similarly been extended to Artificial Intelligence, requiring extensive documentation and labelling and clarifying consumer rights with respect to software and generated digital content.

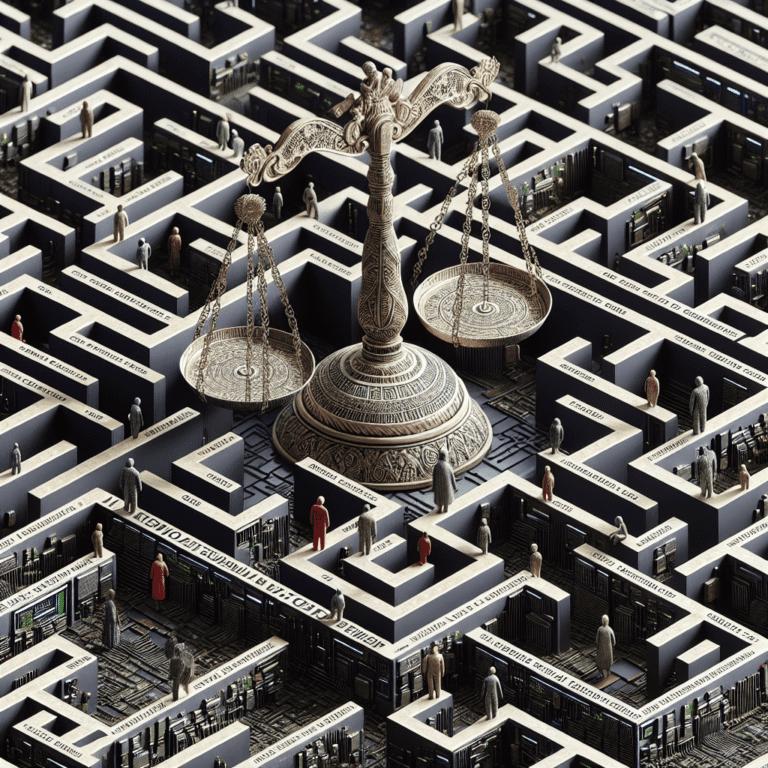

Complementing the Artificial Intelligence Act, the European Commission may leverage the Digital Markets Act (DMA) and Digital Services Act (DSA) to regulate Artificial Intelligence embedded in core digital services. Legislative and enforcement measures now include monitoring large platforms for systemic risks and investigating partnerships among dominant industry players, particularly where exclusivity arrangements or preferential access to critical inputs such as computing power or high-quality data could entrench market power. The EU, in concert with competition authorities in other jurisdictions, has signaled its readiness to scrutinize vertical integration, strategic alliances, and hiring practices that may stifle rivalry or hamper market entry. Notably, the Commission is paying close attention to the integration of Artificial Intelligence into consumer devices and foundational technologies, as well as to growing competition concerns over access to specialist chips, cloud services, and technical talent.

Despite the ambition of the EU Artificial Intelligence Act and related digital market rules, concerns abound regarding compliance complexity, enforcement consistency, and the possibility of inadvertently stifling innovation or market entry, particularly for SMEs and non-EU firms. Content creators and rights-holders have criticized perceived gaps in protective measures, especially in terms of copyright and creative industry impact. Regulatory and antitrust authorities are thus urged to balance rigorous oversight with proportionality, so as not to handicap the very technological progress regulators seek to guide, as the legal landscape for Artificial Intelligence continues to evolve on both sides of the Atlantic.