Artificial intelligence is rapidly transforming content creation, blurring the line between text written by humans and text generated by software such as ChatGPT and Jasper. This growing ambiguity has led to the emergence of AI content detectors, tools designed to distinguish human-written from AI-generated content. They are widely used in blogging, academic submissions, and digital marketing, where authenticity is crucial for credibility and for compliance with industry standards.

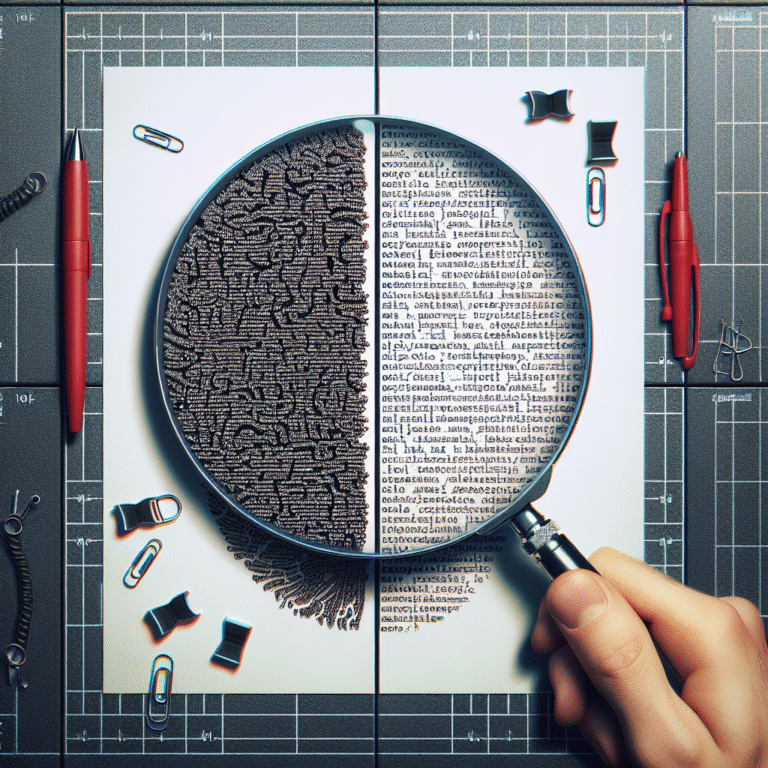

AI content detectors work by analyzing linguistic patterns in the submitted text. Two key metrics are perplexity, which measures how predictable the word choices are, and burstiness, which measures how much sentence length and structure vary across the text. Human writing typically shows more unpredictability and a more varied rhythm, while AI-generated text tends to be more uniform and polished. Popular detectors such as Originality.ai, GPTZero, Copyleaks AI Detector, and Writer.com’s AI Content Detector have become common tools for vetting content integrity, especially where search ranking, originality, or honesty is at stake.
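To make the two metrics more concrete, here is a minimal sketch in Python. It is not how any of the detectors named above actually work. Production detectors are generally understood to score text with a large neural language model, whereas this sketch substitutes a crude add-one-smoothed unigram model fit on a reference text. It also assumes one common way of quantifying burstiness: the coefficient of variation (standard deviation divided by mean) of sentence lengths. The function names `burstiness` and `unigram_perplexity` are hypothetical.

```python
import math
import re
from collections import Counter


def split_sentences(text: str) -> list[str]:
    # Naive splitter: break after ., !, or ? followed by whitespace.
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def tokenize(text: str) -> list[str]:
    # Lowercase word tokens; punctuation is discarded.
    return re.findall(r"[a-z']+", text.lower())


def burstiness(text: str) -> float:
    """Coefficient of variation of sentence lengths (in words).

    Assumption: 0.0 means perfectly uniform sentence lengths;
    higher values mean a more varied rhythm.
    """
    lengths = [len(tokenize(s)) for s in split_sentences(text)]
    if len(lengths) < 2:
        return 0.0
    mean = sum(lengths) / len(lengths)
    variance = sum((n - mean) ** 2 for n in lengths) / len(lengths)
    return math.sqrt(variance) / mean if mean else 0.0


def unigram_perplexity(text: str, reference: str) -> float:
    """Perplexity of `text` under an add-one-smoothed unigram model of `reference`.

    A stand-in for a real language model: lower values mean the
    text's words are more predictable given the reference.
    """
    ref_counts = Counter(tokenize(reference))
    tokens = tokenize(text)
    if not tokens:
        return float("inf")
    vocab_size = len(set(ref_counts) | set(tokens))
    total = sum(ref_counts.values())
    log_prob = 0.0
    for tok in tokens:
        p = (ref_counts[tok] + 1) / (total + vocab_size)
        log_prob += math.log(p)
    # Perplexity is the exponential of the average negative log-probability.
    return math.exp(-log_prob / len(tokens))


uniform = "The tool is fast. The tool is cheap. The tool is good."
varied = ("It works. But when the deadline loomed and nobody had slept, "
          "the whole thing fell apart in ways we never expected. Oops.")

print(burstiness(uniform))   # 0.0: every sentence has four words
print(burstiness(varied))    # larger: sentence lengths of 2, 19, and 1 words
print(unigram_perplexity(uniform, reference=uniform))
print(unigram_perplexity(varied, reference=uniform))
```

The toy inputs illustrate the intuition in the paragraph above: the repetitive text has zero burstiness and low perplexity relative to itself, while the varied text scores higher on both. A single threshold on either number would still misclassify plenty of real writing, which is one reason detectors make mistakes.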

Despite their utility, AI detectors are far from infallible. They often correctly flag templated writing, but they can misidentify up to 20% of human-written texts as AI output, and texts by non-native English speakers are especially affected. As AI models get better at mimicking human writing, detection accuracy declines, raising both ethical and practical concerns.

The stakes differ by field. In SEO, search engines prioritize quality and value regardless of how content was produced, and they penalize low-value, spammy AI-generated articles. In education, detectors are used to protect academic integrity, but a false positive can lead to a student being wrongly accused of misconduct. Similarly, undisclosed AI-generated articles risk damaging the credibility of businesses and media outlets.

Experts recommend pairing AI content detection with human oversight and contextual analysis for the highest trust and accuracy, using collaboration platforms like EasyContent to streamline review workflows. Content verification is evolving alongside artificial intelligence, which calls for ongoing vigilance, transparency, and ethical best practices.
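The practical weight of a false-positive rate like the 20% figure cited above depends on how many submissions are actually human-written. The short Python sketch below works through that arithmetic. Only the 20% rate comes from this article; the batch of 100 AI-written and 900 human-written documents and the 90% detection rate are hypothetical numbers chosen for illustration, and `flag_outcomes` is a hypothetical helper.

```python
def flag_outcomes(n_ai: int, n_human: int,
                  detection_rate: float, false_positive_rate: float) -> dict:
    """Expected flags from a detector applied to a mixed batch of documents.

    All inputs are hypothetical; plug in your own estimates.
    """
    true_flags = n_ai * detection_rate            # AI texts correctly flagged
    false_flags = n_human * false_positive_rate   # human texts wrongly flagged
    flagged = true_flags + false_flags
    # Precision: the share of flagged documents that really are AI-generated.
    precision = true_flags / flagged if flagged else 0.0
    return {"true_flags": true_flags,
            "false_flags": false_flags,
            "precision": precision}


# Hypothetical batch: 100 AI-written and 900 human-written documents,
# a 90% detection rate, and the 20% false-positive rate cited above.
result = flag_outcomes(n_ai=100, n_human=900,
                       detection_rate=0.90, false_positive_rate=0.20)
print(f"true flags:  {result['true_flags']:.0f}")
print(f"false flags: {result['false_flags']:.0f}")
print(f"precision:   {result['precision']:.2f}")
```

Under these assumptions the detector raises about 90 correct flags and 180 false ones, so only about one flag in three points to genuinely AI-generated text. This is why a flag is best treated as a prompt for human review rather than a verdict.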