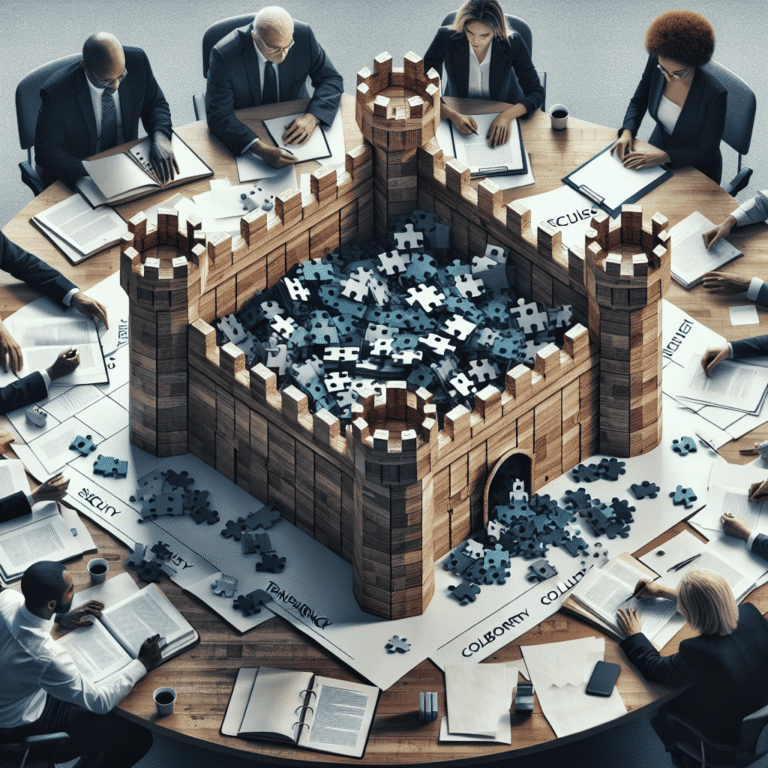

Hugging Face has introduced a dedicated leaderboard for the security evaluation of large language models, aiming to fill a critical gap in the benchmarking of artificial intelligence systems. By providing a transparent, open-source platform, the initiative lets researchers, developers, and organizations assess how well various models withstand security challenges and adversarial threats.

The leaderboard aggregates and standardizes results covering a range of vulnerability tests, including prompt injection, data poisoning, jailbreaking, and other attack vectors commonly affecting large language models. This collaborative approach invites contributions from the wider research community, helping to establish best practices and expose potential weaknesses before models are deployed in sensitive or high-stakes environments.
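To make the idea of aggregating and standardizing results concrete, here is a minimal sketch of one way per-category attack results could be turned into a comparable score. It is not the leaderboard's actual method: the model names, category labels, counts, and the unweighted-mean scoring rule are all assumptions made for illustration.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical raw results: (model, attack category, attacks attempted, attacks resisted).
# None of these names or numbers come from the actual leaderboard.
RAW_RESULTS = [
    ("model-a", "prompt_injection", 200, 150),
    ("model-a", "jailbreaking", 100, 90),
    ("model-b", "prompt_injection", 200, 180),
    ("model-b", "jailbreaking", 100, 60),
]


def resistance_rates(results):
    """Standardize raw counts into a per-category resistance rate in [0, 1]."""
    rates = defaultdict(dict)
    for model, category, attempted, resisted in results:
        if attempted <= 0:
            raise ValueError(f"no attempts recorded for {model}/{category}")
        rates[model][category] = resisted / attempted
    return dict(rates)


def rank_models(results):
    """Rank models by the unweighted mean of their per-category rates, best first.

    The unweighted mean is an assumed scoring rule chosen for simplicity.
    """
    rates = resistance_rates(results)
    scored = [(model, mean(categories.values())) for model, categories in rates.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    for model, score in rank_models(RAW_RESULTS):
        print(f"{model}: {score:.3f}")
```

Converting counts to rates before averaging keeps a category with many test cases from dominating the overall score. A real benchmark might instead weight categories by severity or report each category separately.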

Through this new resource, Hugging Face continues its commitment to openness and accountability in artificial intelligence development, fostering an ecosystem that prioritizes safety alongside innovation. Because the leaderboard is open source, its benchmarks can remain accessible, reproducible, and relevant as new threats and mitigation techniques emerge.