Despite widespread awareness of agentic artificial intelligence among chief financial officers, enthusiasm for deploying these systems remains low. A July 2025 PYMNTS Intelligence report revealed that only 15% of surveyed CFOs are interested in integrating agentic AI into their organizations, reflecting ongoing skepticism about its readiness, return on investment, and business value. While the technology is often lauded for its potential to automate complex workflows and enhance decision-making, finance leaders express concern over unforeseen risks, lack of transparency, and the challenge of quantifying clear benefits.

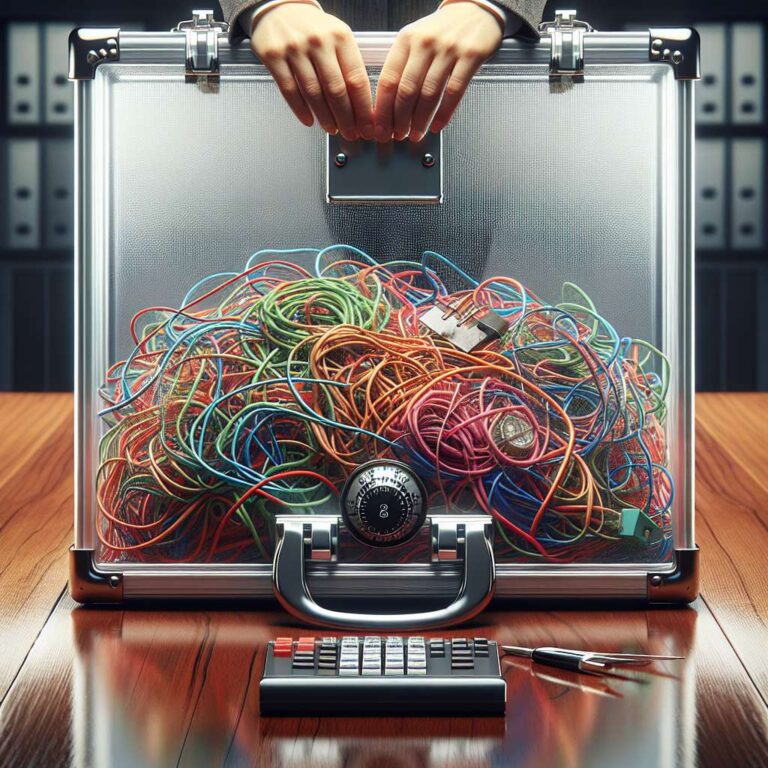

Trust stands as the chief obstacle to adoption. CFOs demand robust safeguards before relinquishing critical decisions to autonomous agents. Key requirements include traceable outputs that reveal the logic behind an agent's conclusions, human-in-the-loop controls for real-time intervention on high-impact decisions, and built-in systems to monitor and correct bias. Without transparent mechanisms and granular oversight, finance leaders are unwilling to let agentic artificial intelligence operate independently within compliance-driven environments. They stress the need for usable paper trails, the ability to revert automated actions, and assurances against unpredictable system behavior that could endanger business operations or reputational standing.
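To make these requirements concrete, the following is a minimal Python sketch of how a human-in-the-loop gate with a paper trail and reversible actions might be structured. It is an illustration only, not a design taken from the PYMNTS report or from any vendor: the names `ApprovalGate`, `AuditEntry`, and `review_threshold`, the in-memory `ledger`, and the 500.0 cutoff are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable, Dict, List


@dataclass(frozen=True)
class AuditEntry:
    """One immutable record in the paper trail (hypothetical schema)."""
    entry_id: int
    timestamp: str
    action: str
    amount: float
    rationale: str  # the agent's stated reasoning, kept for traceability
    status: str     # "executed", "pending_review", or "reverted"


class ApprovalGate:
    """Runs low-value actions automatically and holds high-value ones for a human."""

    def __init__(self, review_threshold: float) -> None:
        self.review_threshold = review_threshold  # assumed policy cutoff
        self.log: List[AuditEntry] = []           # append-only audit trail
        self._undo: Dict[int, Callable[[], None]] = {}

    def _record(self, action: str, amount: float, rationale: str, status: str) -> AuditEntry:
        entry = AuditEntry(len(self.log), datetime.now(timezone.utc).isoformat(),
                           action, amount, rationale, status)
        self.log.append(entry)
        return entry

    def submit(self, action: str, amount: float, rationale: str,
               execute: Callable[[], None], undo: Callable[[], None]) -> AuditEntry:
        # High-impact decisions are logged but not executed until a human reviews them.
        if amount >= self.review_threshold:
            return self._record(action, amount, rationale, "pending_review")
        execute()
        entry = self._record(action, amount, rationale, "executed")
        self._undo[entry.entry_id] = undo
        return entry

    def revert(self, entry_id: int, reason: str) -> AuditEntry:
        # Only actions that were executed and not yet reverted can be undone.
        undo = self._undo.pop(entry_id, None)
        if undo is None:
            raise ValueError(f"entry {entry_id} cannot be reverted")
        undo()
        original = self.log[entry_id]
        return self._record(original.action, original.amount, reason, "reverted")


# Usage with a toy in-memory ledger (hypothetical data).
ledger = {"balance": 1000.0}
gate = ApprovalGate(review_threshold=500.0)

small = gate.submit(
    "pay_invoice", 120.0, "matched purchase order and receipt",
    execute=lambda: ledger.update(balance=ledger["balance"] - 120.0),
    undo=lambda: ledger.update(balance=ledger["balance"] + 120.0),
)
large = gate.submit(
    "pay_invoice", 9000.0, "new vendor, no payment history",
    execute=lambda: ledger.update(balance=ledger["balance"] - 9000.0),
    undo=lambda: ledger.update(balance=ledger["balance"] + 9000.0),
)
reverted = gate.revert(small.entry_id, "duplicate invoice found")
```

In this sketch the log is append-only: a reversal adds a new entry rather than editing the original, so the record of what the agent did and why remains intact. A production system would also need persistent storage, reviewer identity, and access controls, none of which are shown here.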

Other sectors display more optimism, yet also report uneven results. In professional services, 83% of firms are deploying or planning to deploy agentic artificial intelligence, but almost a third find that the technology falls short, blaming a lack of internal expertise and fragmented data infrastructure. Technical and operational barriers are even starker in finance, where agentic systems must interface with legacy applications, resource planning tools, and compliance platforms. The surge in network traffic generated by artificial intelligence workloads outpaces traditional monitoring, limiting real-time visibility and jeopardizing security. Encrypted traffic and siloed authentication further complicate oversight, making fraud detection and data protection harder as the risk of exploitation grows.

Cultural resistance compounds the challenge. Executives worry that if agentic systems resemble inscrutable black boxes, end users will struggle to trust and adopt them. The concern is sharpest in sensitive sectors such as payments, where agentic artificial intelligence raises alarms about fraud, abuse of permissions, and inadequate authentication measures. Experts anticipate that practical deployment will require tailoring agents to specific industries, with domain-appropriate interfaces and logic rather than one-size-fits-all solutions. Until transparency, security, and measurable value are proven, agentic artificial intelligence will remain a boardroom buzzword rather than an enterprise reality.