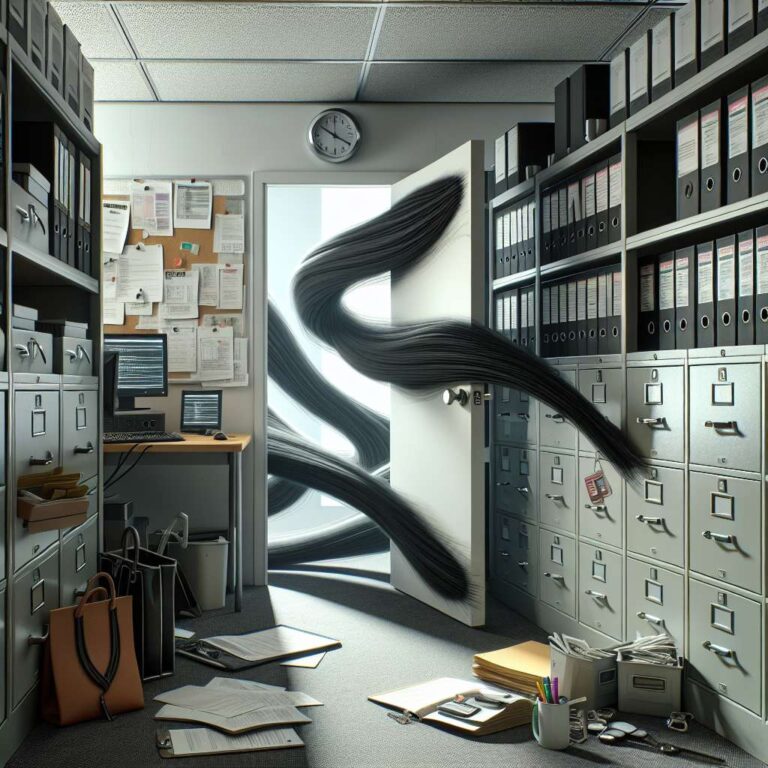

Shadow artificial intelligence (AI), meaning AI tools deployed in organizations without proper oversight, is intensifying both the risk and the cost of data breaches, according to IBM's latest Cost of a Data Breach Report. One in five organizations surveyed suffered a cyberattack linked directly to shadow AI, and those breaches cost significantly more on average than incidents at firms with little or no shadow AI. Only 13% of respondents reported breaches involving AI systems, but 97% of those organizations lacked adequate access controls for their AI tools.

The research exposes a widespread lack of security and governance as businesses race to adopt emerging AI platforms. IBM's findings indicate that weak authentication remains a leading vulnerability, and many attacks originate through supply-chain vectors such as compromised applications, APIs, or plug-ins. Once attackers compromise an AI tool, the breach commonly spreads to other organizational data stores (in 60% of related incidents) and, in about 31% of cases, also disrupts operations or critical infrastructure. The report singles out fundamental measures such as zero-trust architecture and network segmentation as essential but often neglected defenses.

Governance around AI remains weak. Despite mounting evidence that deliberate oversight can curb costs and limit exposure, 63% of affected organizations admitted to having no formal AI governance policy at the time of the breach. Even among those with a governance framework, almost half lacked approval processes for AI deployments, and 62% did not enforce proper access controls. Only a third routinely audited their networks for unsanctioned AI tools, which helps explain why shadow AI vulnerabilities persist.

Meanwhile, the threat landscape is evolving: 16% of all breaches involved attackers using AI, primarily for phishing and deepfake impersonation, and generative AI has cut the time needed to craft a deceptive message from hours to minutes. IBM's findings are drawn from 3,470 interviews at 600 organizations that experienced data breaches between March 2024 and February 2025, and they paint a sobering picture of the urgent, unaddressed risks posed by unmanaged enterprise AI adoption.