As digital platforms grapple with a growing tide of disinformation and harmful user content, organizations are increasingly turning to Artificial Intelligence content moderation systems to scale their response. Traditionally, human moderators reviewed content for appropriateness, a process that was slow, labor-intensive, and prone to subjectivity. With the exponential growth in user-generated and Artificial Intelligence-generated content, manual moderation alone can no longer keep pace, which has led to the adoption of automated and hybrid systems that combine Artificial Intelligence with human judgment for greater speed and accuracy.

There are six main methods organizations use for Artificial Intelligence content moderation: pre-moderation, post-moderation, reactive moderation, distributed moderation, user-only moderation, and hybrid moderation. Pre-moderation uses tools such as natural language processing to screen content before publication, automatically rejecting or flagging anything that matches a blocklist or violates community guidelines. Post-moderation lets content go live immediately, with Artificial Intelligence or human reviewers subsequently flagging or removing violations. Reactive and distributed moderation crowdsource decision-making to users, who report and vote on posts, while Artificial Intelligence prioritizes the reports and looks for manipulation or bias. User-only moderation gives registered users filtering capabilities and limited oversight, with Artificial Intelligence learning from their interactions. Hybrid moderation pairs Artificial Intelligence's rapid detection and pre-filtering with human oversight, so that people make the final judgment on nuanced or context-dependent cases, which lends credibility to the outcome.

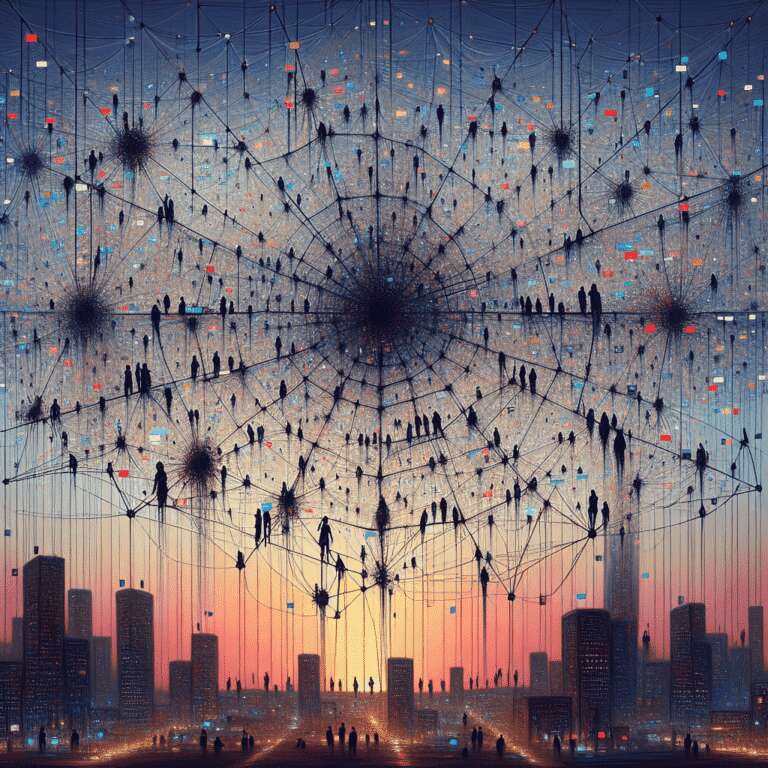
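
To make the pre-moderation and hybrid approaches more concrete, here is a minimal Python sketch of how a submission might be routed before publication. Everything in it is a hypothetical illustration rather than any platform's actual system: the `BLOCKLIST` terms, the two thresholds, and the `toy_risk_score` function (a crude stand-in for a trained classifier) are all assumptions chosen for readability.

```python
from enum import Enum
from typing import Callable


class Decision(Enum):
    APPROVE = "approve"
    REJECT = "reject"
    HUMAN_REVIEW = "human_review"


# Hypothetical values for illustration only.
BLOCKLIST = {"spamword", "scamlink"}
REJECT_THRESHOLD = 0.9   # at or above this score, reject automatically
REVIEW_THRESHOLD = 0.5   # at or above this score, escalate to a human


def _tokens(text: str) -> list[str]:
    """Lowercase the text and strip basic punctuation from each word."""
    return [w.strip(".,!?") for w in text.lower().split()]


def toy_risk_score(text: str) -> float:
    """Stand-in for a trained model: share of words that look suspicious."""
    suspicious = {"free", "winner", "click"}
    words = _tokens(text)
    if not words:
        return 0.0
    return sum(1 for w in words if w in suspicious) / len(words)


def pre_moderate(
    text: str, score_fn: Callable[[str], float] = toy_risk_score
) -> Decision:
    """Screen a submission before it is published."""
    # Step 1: a blocklist match is rejected outright.
    if set(_tokens(text)) & BLOCKLIST:
        return Decision.REJECT
    # Step 2: the model score decides between automation and human review.
    score = score_fn(text)
    if score >= REJECT_THRESHOLD:
        return Decision.REJECT
    if score >= REVIEW_THRESHOLD:
        return Decision.HUMAN_REVIEW  # the hybrid step: a person decides
    return Decision.APPROVE


if __name__ == "__main__":
    for post in ["Nice photo of the lake", "visit scamlink now", "click here, winner"]:
        print(post, "->", pre_moderate(post).value)
```

The middle band between the two thresholds is where the hybrid model shows up: clear-cut cases are handled automatically, while ambiguous ones are queued for a human. In practice the thresholds would be tuned against labeled data, and `score_fn` would be replaced with a real model.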

Artificial Intelligence content moderation relies on advanced models that combine natural language processing, machine learning, and computer vision to detect rule violations across text, images, audio, and video. These tools are essential because generative Artificial Intelligence makes it possible to produce massive amounts of new, contextually complex content that rivals human-generated material and can evade traditional filters. Leading platforms such as Meta, YouTube, and TikTok use multimodal Artificial Intelligence and deep learning to recognize sarcasm, cultural nuance, memes, and more. As organizations strive to balance scale and accuracy, the shift to Artificial Intelligence-driven moderation is accelerating, as seen in moves like TikTok's replacement of hundreds of human moderators with Artificial Intelligence systems. With generative Artificial Intelligence evolving rapidly, flexible, adaptive moderation strategies are becoming business imperatives, blending automation, user input, and human oversight to keep digital spaces safe and compliant.