GeForce NOW brings RTX 5080-class power and artificial intelligence upgrades

NVIDIA will upgrade GeForce NOW to the Blackwell RTX architecture in September, delivering GeForce RTX 5080-class performance, next-generation artificial intelligence features and a new Install-to-Play capability that expands the cloud library to nearly 4,500 titles.

Think SMART: How to optimize artificial intelligence factory inference performance

The Think SMART framework outlines how to optimize artificial intelligence inference at scale by balancing workload complexity, multidimensional performance and ecosystem considerations. The article highlights architecture, software and return-on-investment levers that AI factories can use to maximize tokens per watt and cost efficiency.
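The summary names tokens per watt and cost efficiency as the metrics AI factories optimize. A minimal sketch of how such figures could be computed follows, assuming "tokens per watt" means sustained throughput (tokens per second) divided by average power draw in watts, which is equivalent to tokens per joule. The function names and all numbers are hypothetical illustrations, not taken from the article or from NVIDIA.

```python
# Hypothetical helpers illustrating the efficiency metrics named above.
# Assumption: "tokens per watt" = sustained throughput (tokens/s) / average power (W),
# i.e. tokens generated per joule of energy consumed.


def tokens_per_watt(tokens_per_second: float, power_watts: float) -> float:
    """Return throughput normalized by power draw (tokens/s per W)."""
    if power_watts <= 0:
        raise ValueError("power_watts must be positive")
    return tokens_per_second / power_watts


def cost_per_million_tokens(tokens_per_second: float, dollars_per_hour: float) -> float:
    """Return serving cost per one million tokens at a fixed hourly price."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    tokens_per_hour = tokens_per_second * 3600
    return dollars_per_hour * 1_000_000 / tokens_per_hour


if __name__ == "__main__":
    # Made-up example values, not measurements from the article.
    print(tokens_per_watt(10_000, 1_000))         # 10.0
    print(cost_per_million_tokens(10_000, 36.0))  # 1.0
```

With power and hourly price held fixed, any lever that raises throughput (architecture, software) increases tokens per watt and lowers cost per token at the same time, which is why the two metrics tend to move together.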

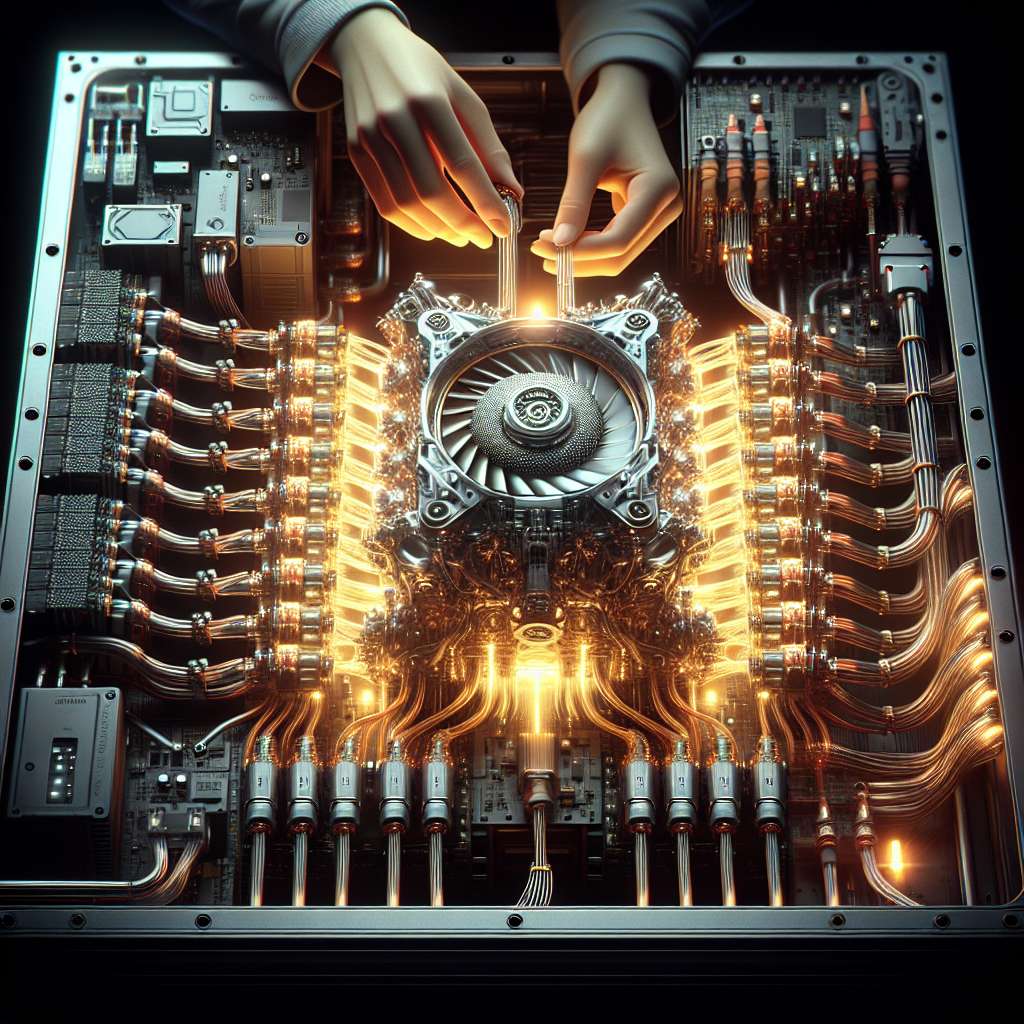

Gearing up for the gigawatt artificial intelligence data center age

Artificial intelligence factories are shifting data center design from web-serving facilities to GPU-dense ones that require new networking and cooling approaches. NVIDIA presents NVLink, Quantum InfiniBand and Spectrum-X as a layered strategy to scale GPUs inside racks and across clusters.

Applicability vs. job displacement: clarifying our findings on artificial intelligence and occupations

Microsoft Research clarifies that its study measured where artificial intelligence chatbots may be useful across occupations and explicitly did not conclude that jobs will be eliminated.

Inside Ukraine’s largest Starlink repair shop

A volunteer workshop in Lviv has become possibly the largest unofficial repair hub for Starlink terminals supporting Ukraine’s military, repairing or modifying thousands of units since 2022.

Why recycling isn’t enough to address the plastic problem

Negotiators at UN talks failed to agree on a binding plastic treaty after disputes over production limits. The article argues that recycling alone cannot tackle plastic’s pollution and climate impacts.

AIContentHub: How artificial intelligence boosts effective social media content creation

AIContentHub uses artificial intelligence to speed ideation, ensure brand consistency, and optimize posts across platforms. The platform combines trend analysis, content generation, scheduling, and analytics to help brands scale social media output.

Google releases per-prompt energy data for Gemini artificial intelligence

Google published a technical report estimating the energy, water and carbon footprint of a text prompt to its Gemini artificial intelligence, with a median prompt using 0.24 watt-hours of electricity. The report provides a detailed breakdown of how that figure was calculated and highlights limits to its scope.
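To put the 0.24 watt-hour median into more familiar units, the sketch below converts it to joules and to the number of median-sized prompts per kilowatt-hour. It is plain unit arithmetic; only the 0.24 Wh constant comes from the report, and the helper names are illustrative. Because the figure is a median rather than a mean, multiplying it by a prompt count would not give an accurate total.

```python
# Unit conversions for the median per-prompt energy figure quoted above.
MEDIAN_WH_PER_PROMPT = 0.24  # watt-hours per median text prompt (from the report)
JOULES_PER_WH = 3600.0       # 1 Wh = 1 W sustained for 3600 s


def wh_to_joules(wh: float) -> float:
    """Convert watt-hours to joules."""
    return wh * JOULES_PER_WH


def prompts_per_kwh(wh_per_prompt: float) -> float:
    """How many prompts of the given energy fit into one kilowatt-hour."""
    if wh_per_prompt <= 0:
        raise ValueError("wh_per_prompt must be positive")
    return 1000.0 / wh_per_prompt


if __name__ == "__main__":
    print(f"{wh_to_joules(MEDIAN_WH_PER_PROMPT):.0f} J per median prompt")         # 864 J per median prompt
    print(f"{prompts_per_kwh(MEDIAN_WH_PER_PROMPT):.0f} median prompts per kWh")   # 4167 median prompts per kWh
```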

IBM and NASA launch Surya artificial intelligence model to predict solar weather

IBM and NASA unveiled Surya, an open-source artificial intelligence model trained on nine years of high-resolution data to forecast solar flares. The model improves solar flare classification accuracy by 16% and is available on Hugging Face and GitHub.

Ionstream.ai offers NVIDIA B200 bare metal for artificial intelligence workloads

Ionstream.ai is offering NVIDIA HGX B200 bare-metal systems through its GPU-as-a-Service platform for artificial intelligence workloads. The hourly rate for one-month contracts is garbled in the source (it reads "?.50 per hour"), so the precise price is not stated; lower pricing is available for longer commitments.